34 Dimensionality Reduction

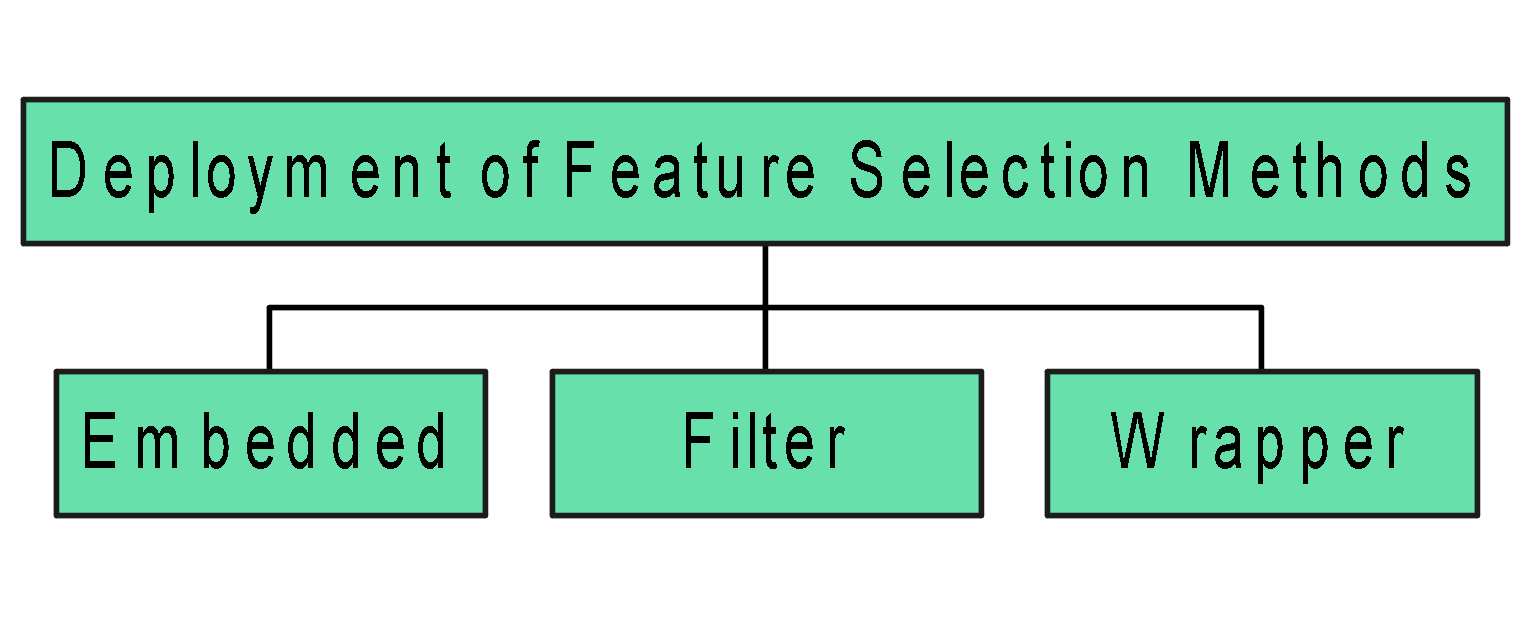

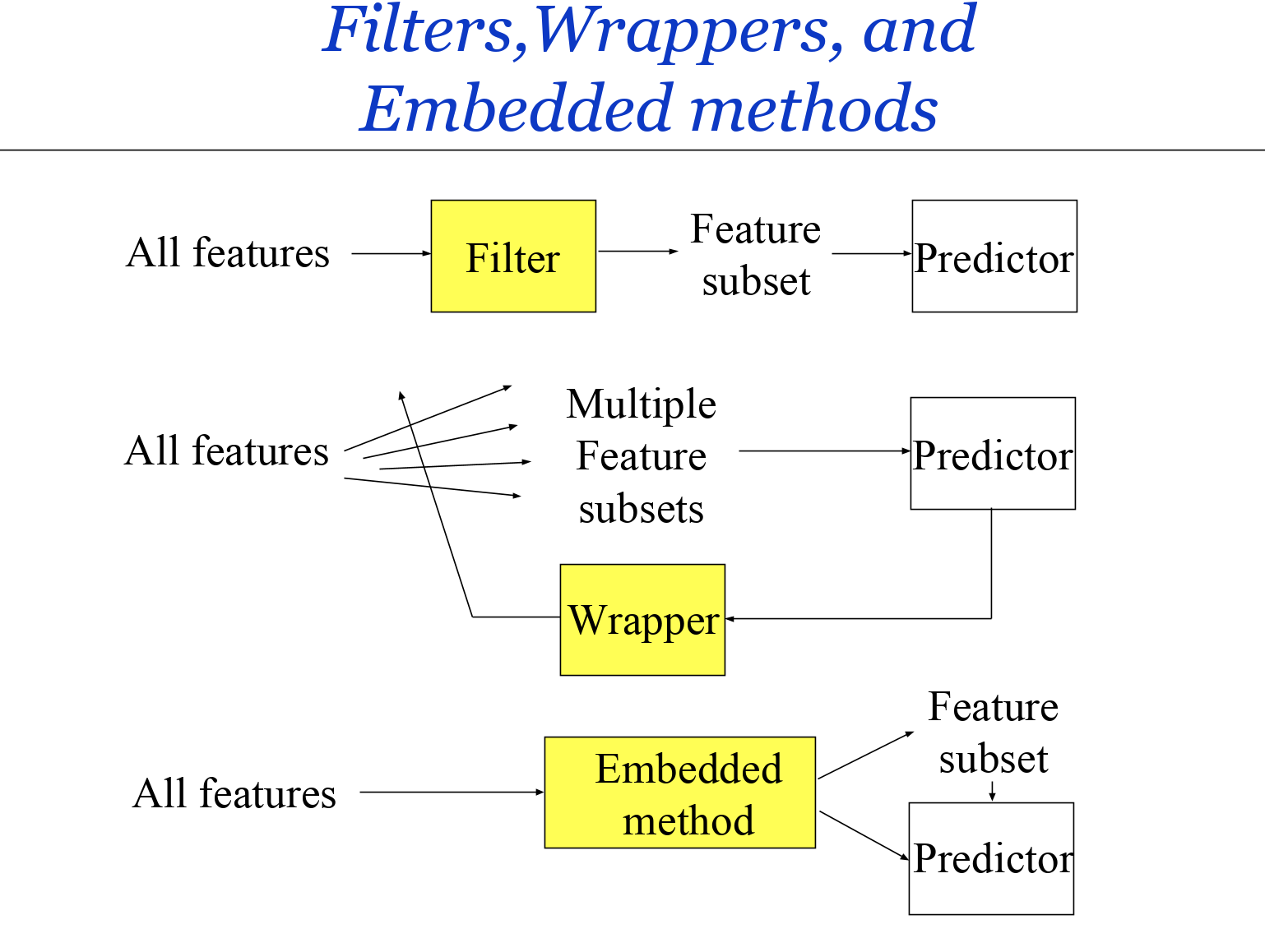

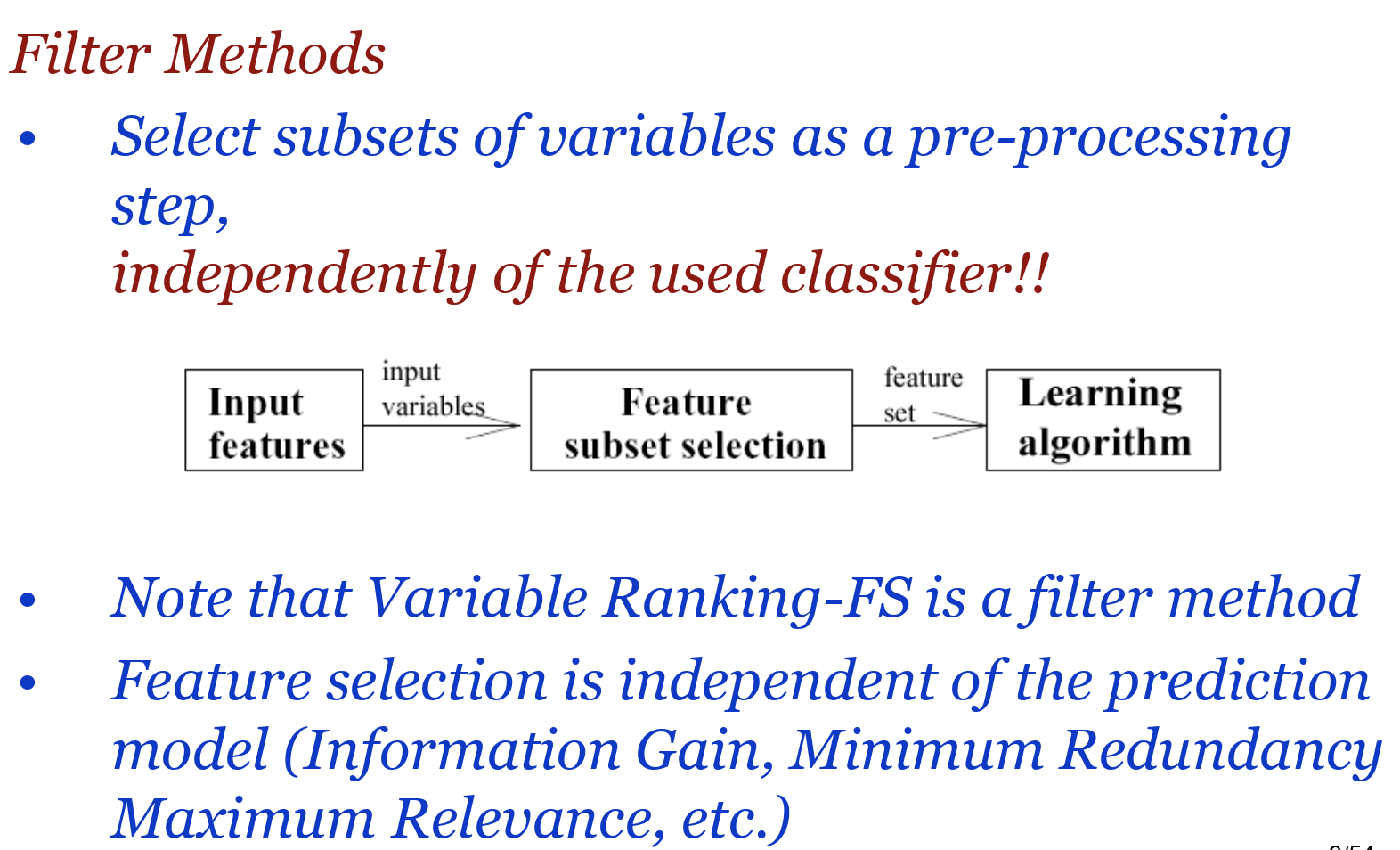

Supervised | Feature Selection

A process that chooses an optimal subset of features according to an objective function.

The objectives are to reduce dimensionality and remove noise while improving data mining performance.
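As a minimal sketch of the idea, the example below uses one of the simplest possible objective functions: the absolute Pearson correlation between each feature and the target. The helper name `select_top_k`, the synthetic data, and the choice of `k=2` are assumptions made for illustration. Real pipelines often use richer criteria such as wrapper or embedded methods.

```python
import numpy as np

def select_top_k(X, y, k):
    """Return indices of the k features with the largest |Pearson r| with y."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    # Pearson correlation of every column with the target.
    denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    scores = np.abs(Xc.T @ yc) / denom
    # Sort descending by score and keep the first k column indices.
    return np.argsort(scores)[::-1][:k], scores

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
# Hypothetical data: only features 0 and 3 drive the target; the rest are noise.
y = 3.0 * X[:, 0] - 2.0 * X[:, 3] + rng.normal(scale=0.5, size=200)

selected, scores = select_top_k(X, y, k=2)
print(sorted(selected.tolist()), scores.round(2))
```

A filter like this scores each feature on its own, so it is cheap but can miss features that are only useful in combination.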

Why Is Feature Selection Important?

- It may improve the performance of the classification algorithm

- The classification algorithm may not scale to the full feature set, in either sample size or running time

- It allows us to better understand the domain

- It is cheaper to collect a reduced set of predictors

- It is safer to collect a reduced set of predictors

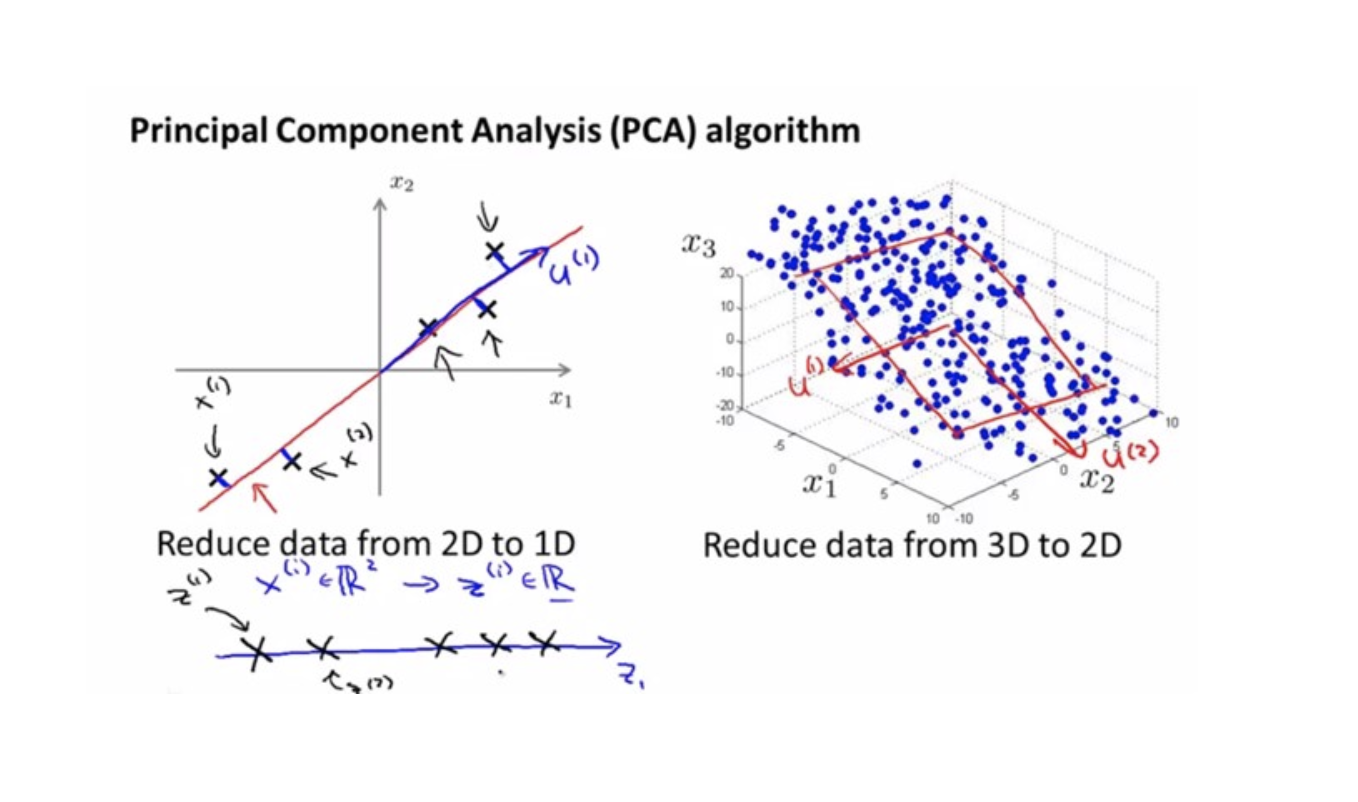

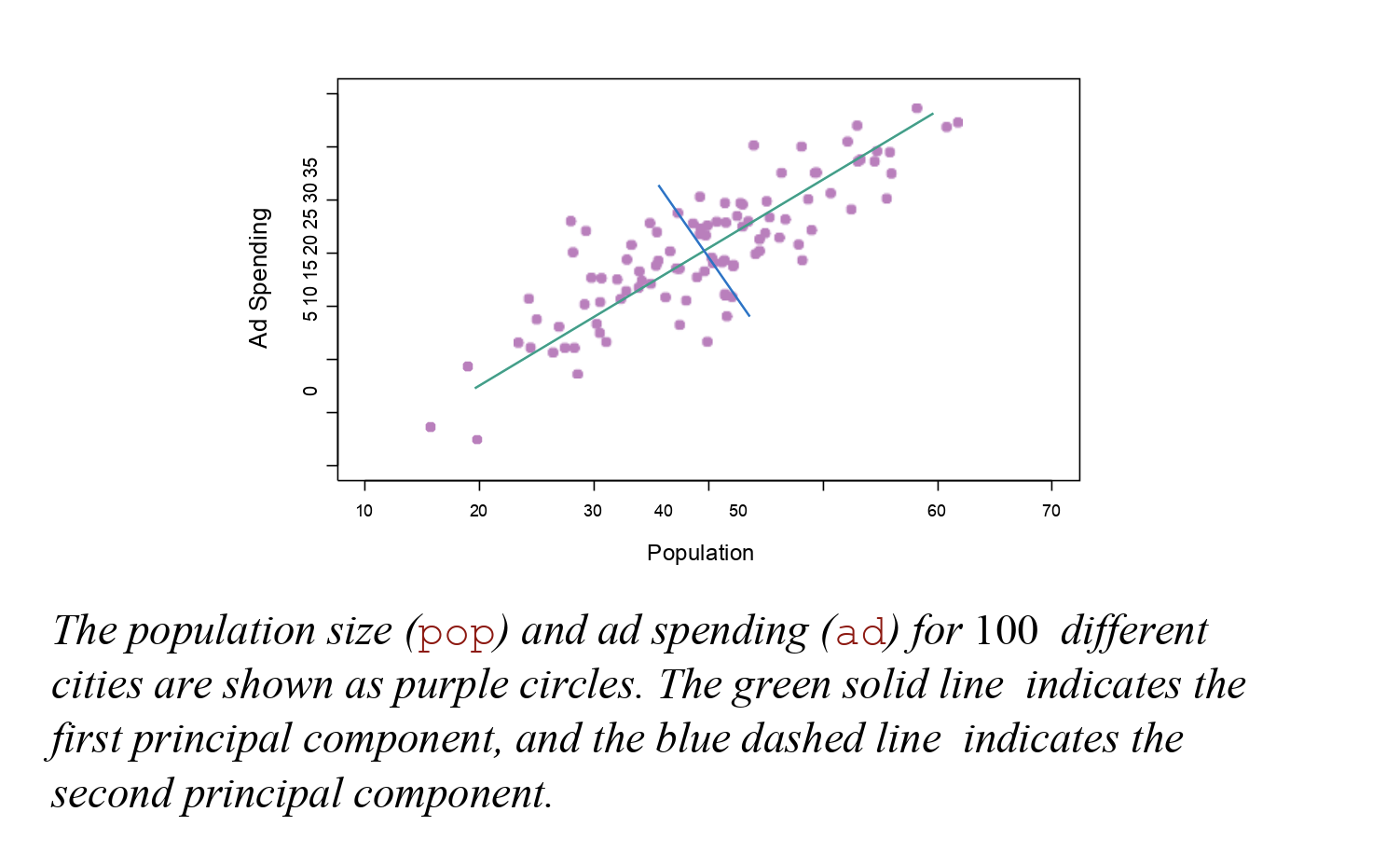

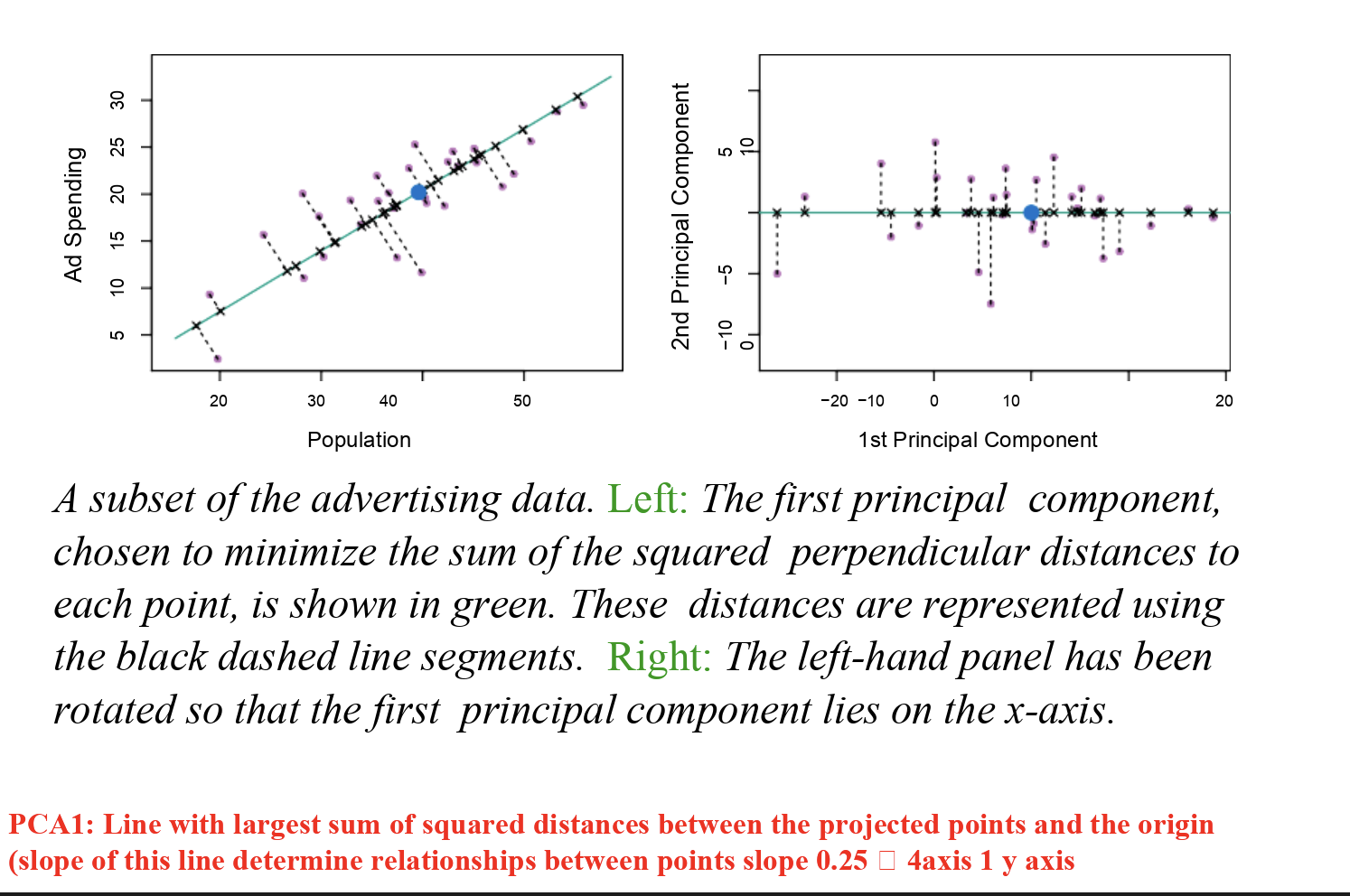

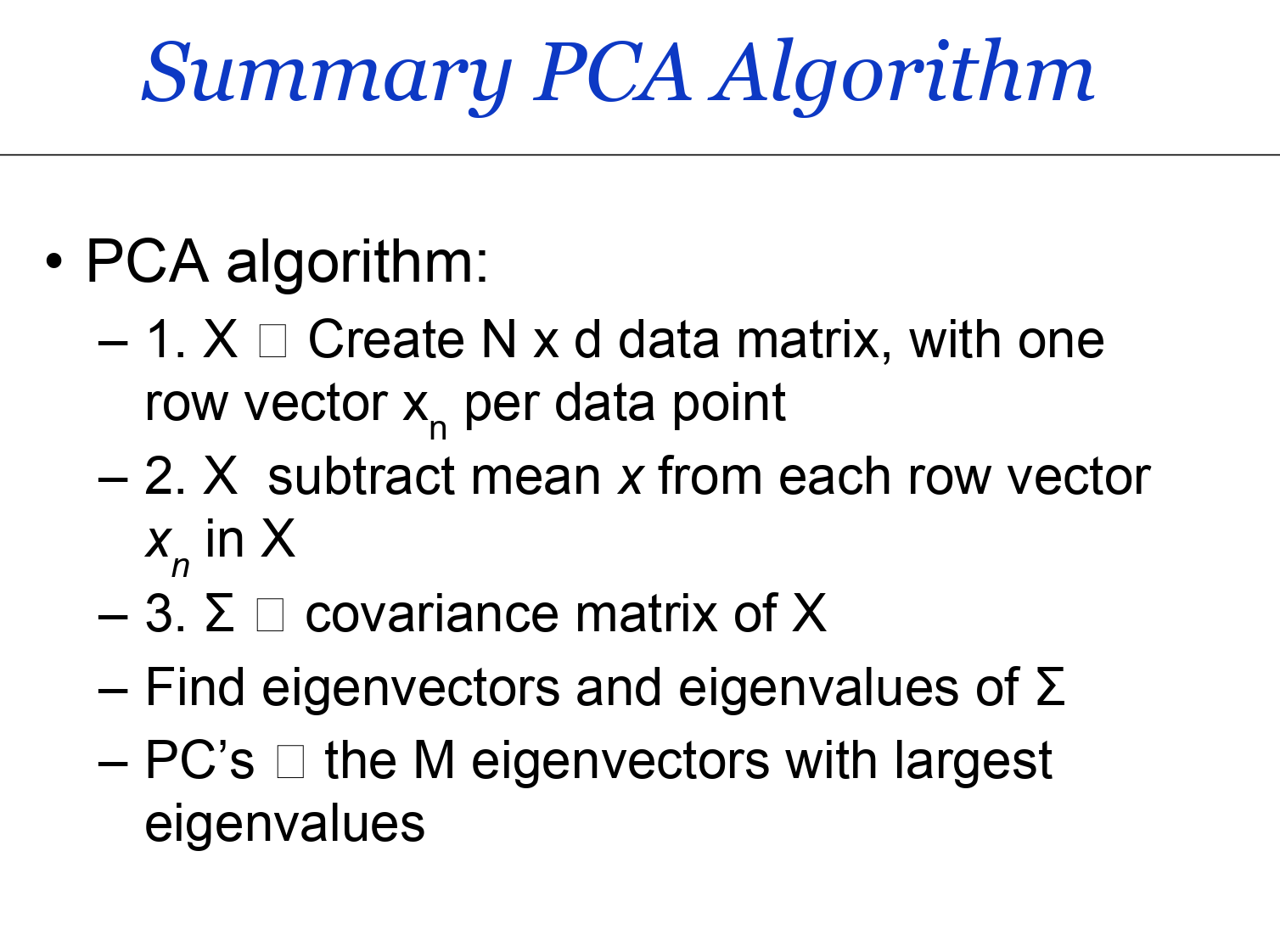

Unsupervised | PCA

A common way to speed up a machine learning algorithm is to reduce the dimensionality of its input with Principal Component Analysis (PCA).

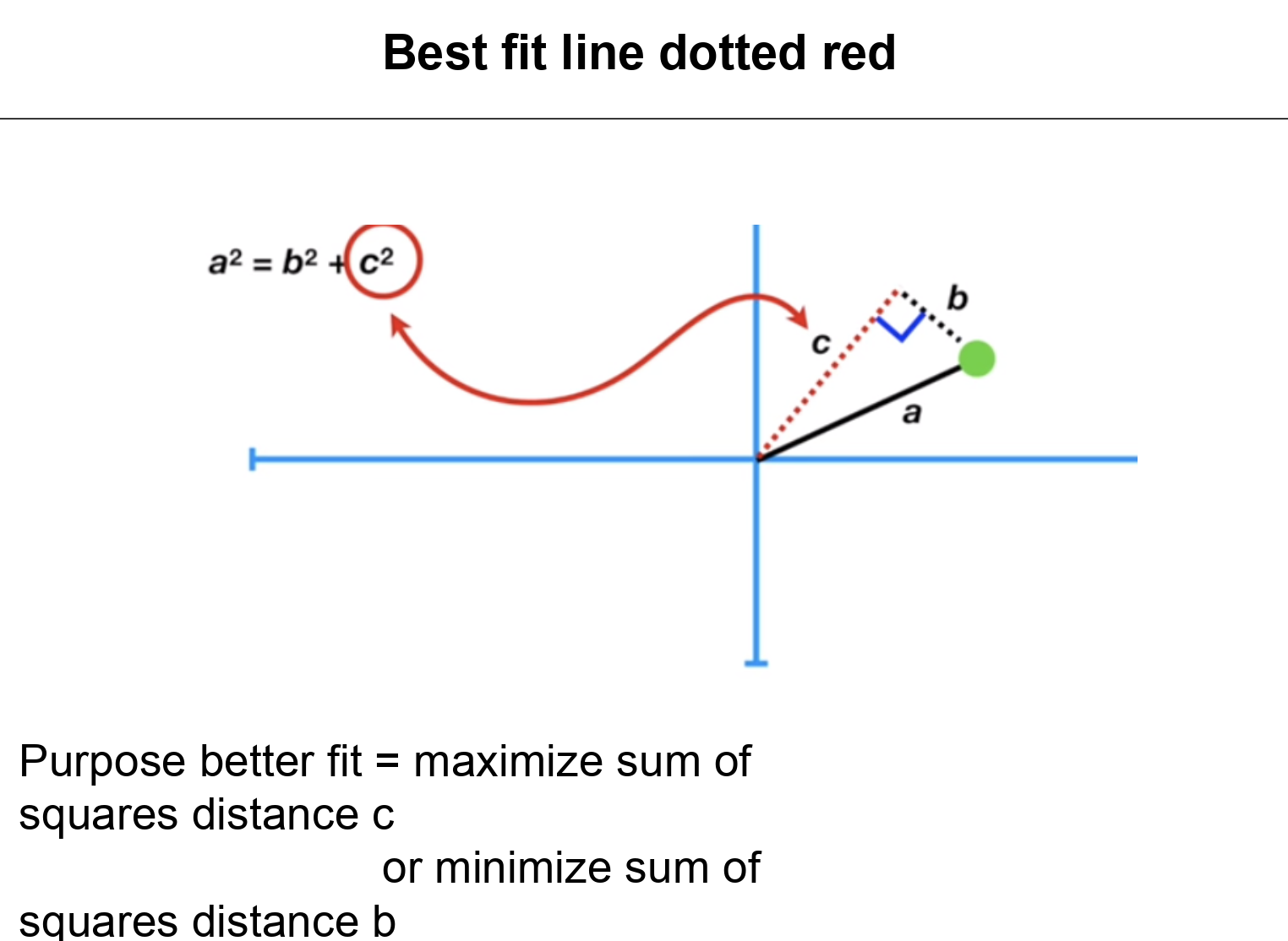

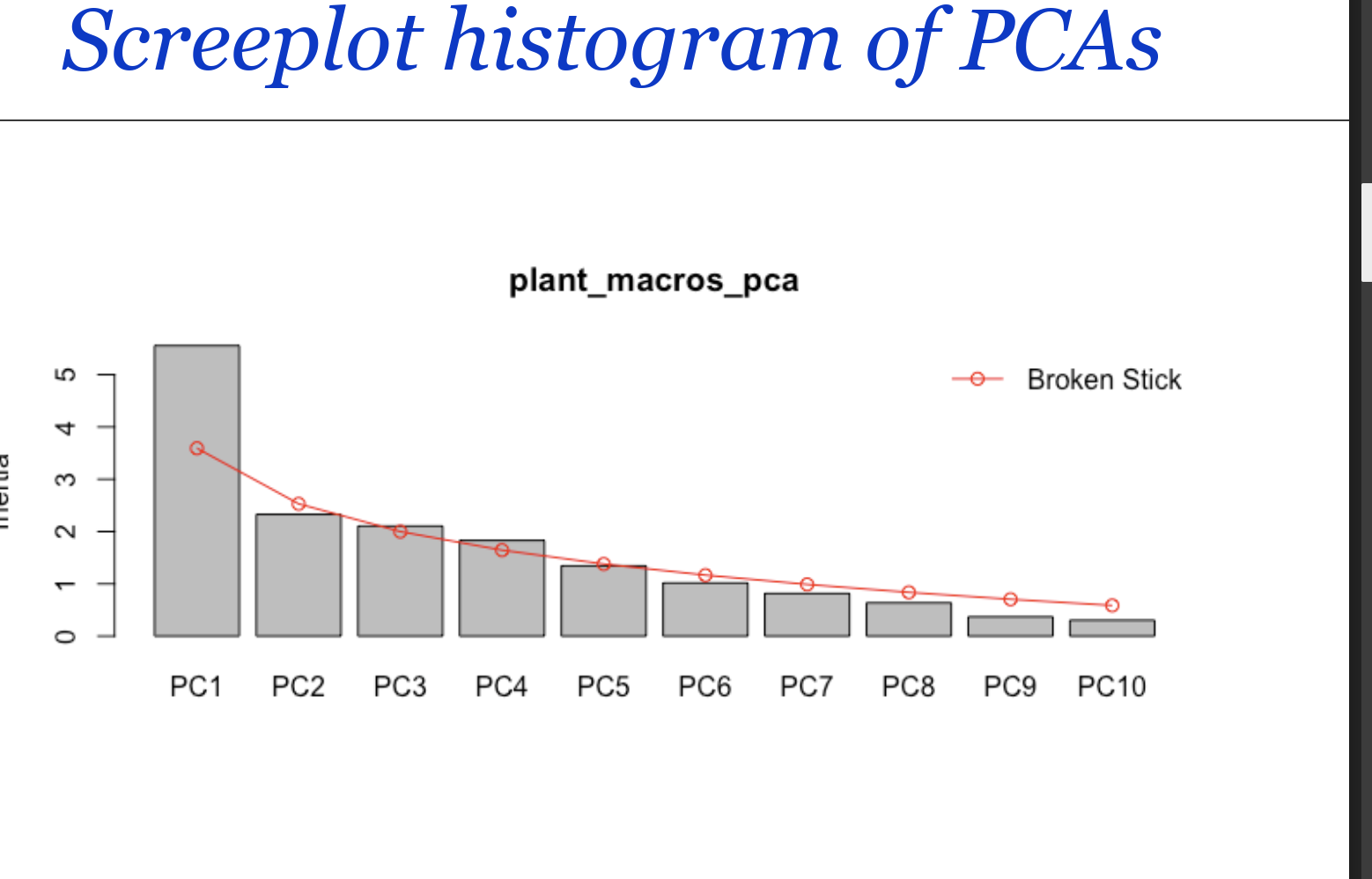

PCA reduces the dimensionality of a data set while retaining as much of the variation present in it as possible. It does so by transforming the original variables into a new set of variables, known as the principal components (PCs), which are orthogonal to one another.
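The transformation can be sketched with a singular value decomposition of the centered data. This is a minimal illustration, not a replacement for a library implementation; the function name `pca` and the synthetic three-feature data set are assumptions made for the example.

```python
import numpy as np

def pca(X, n_components):
    """Project X onto its top principal components using the SVD."""
    Xc = X - X.mean(axis=0)                  # PCA works on centered data
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    components = Vt[:n_components]           # rows are orthonormal directions
    explained = (S ** 2) / (S ** 2).sum()    # share of variance per component
    return Xc @ components.T, components, explained[:n_components]

rng = np.random.default_rng(0)
t = rng.normal(size=300)
# Hypothetical data: three correlated features that vary mostly along one direction.
X = np.column_stack([t, 2 * t, -t]) + rng.normal(scale=0.1, size=(300, 3))

Z, components, ratio = pca(X, n_components=2)
print(Z.shape, ratio.round(3))
```

Here `ratio` reports how much of the total variance each retained component carries, which is one way to decide how many components to keep.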

Benefits of PCA

- Reduce the number of attributes

- Find out which attributes are most valuable for separating samples

- Find out how accurate the split is

Supervised | Ridge and Lasso

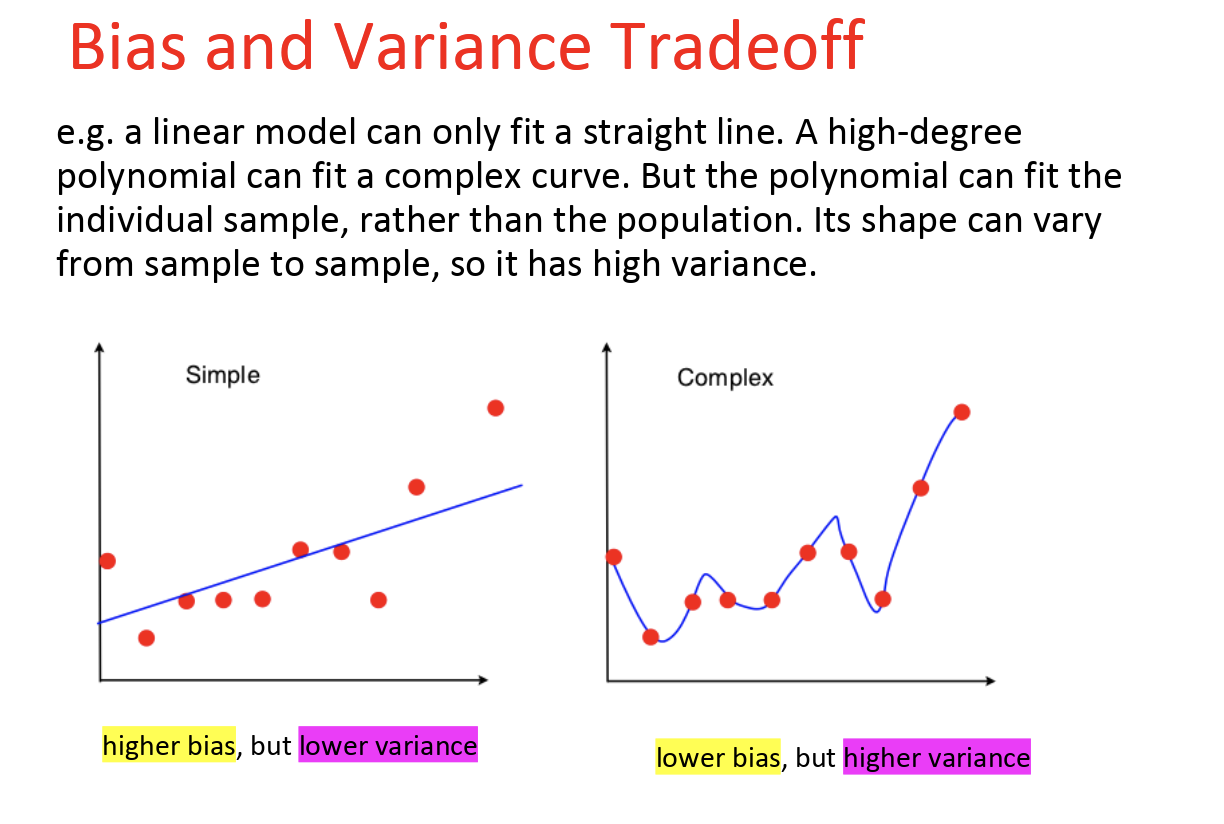

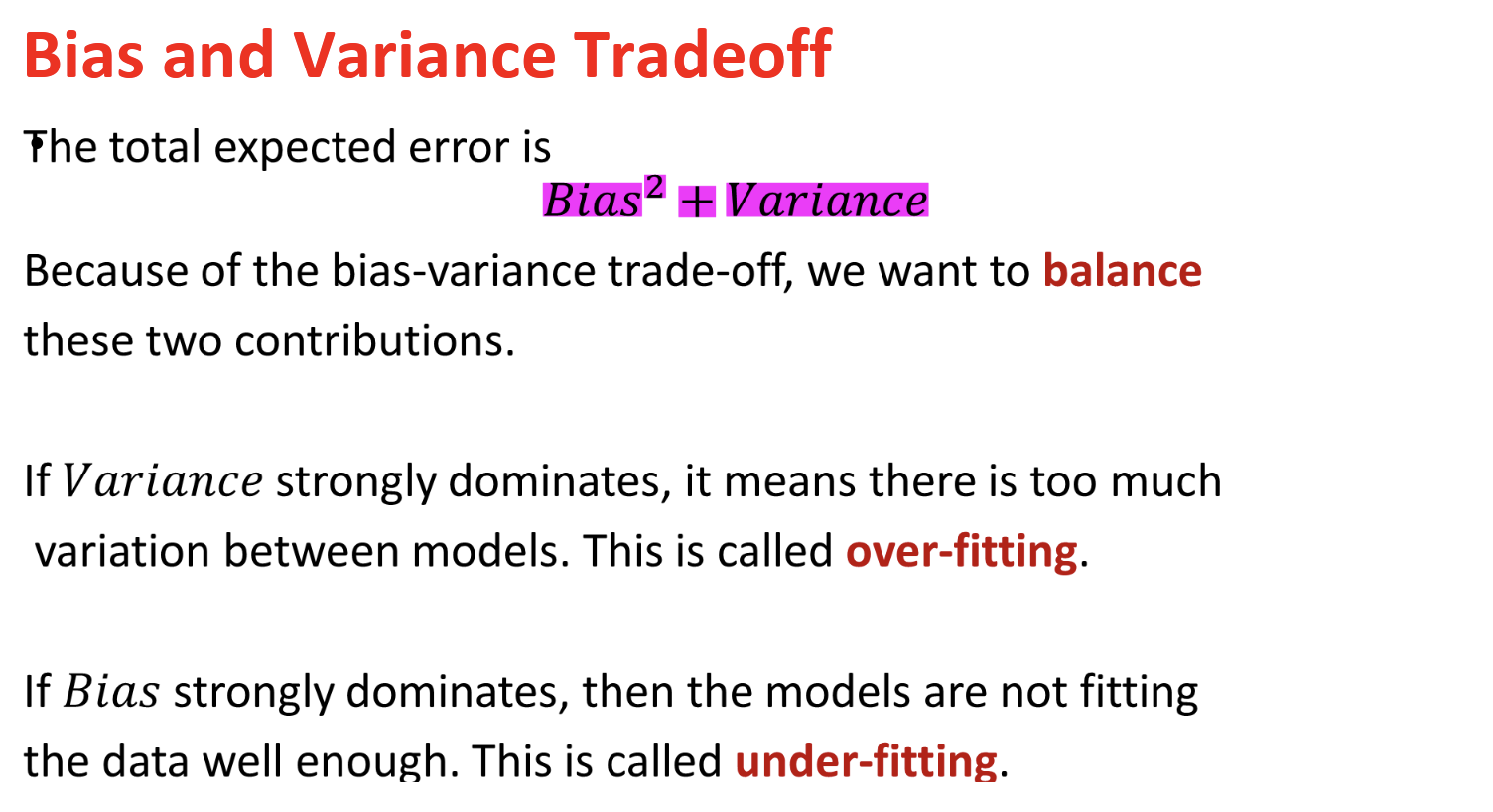

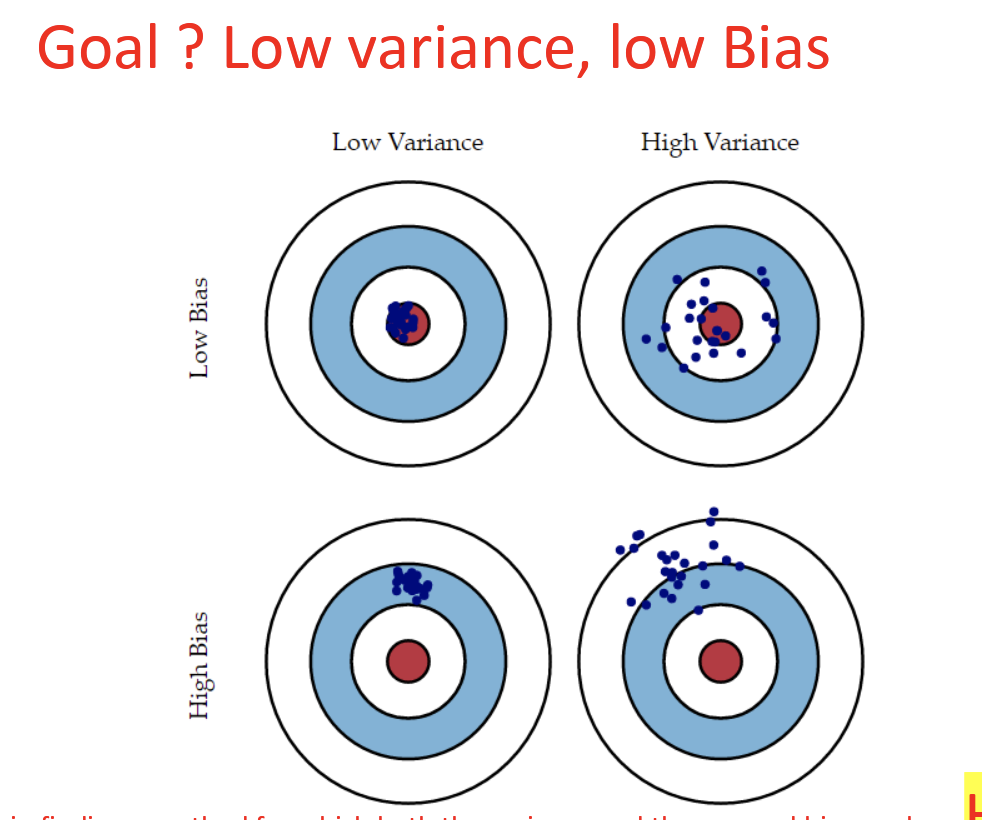

Mastering the trade-off between bias and variance is necessary to become a data science champion!

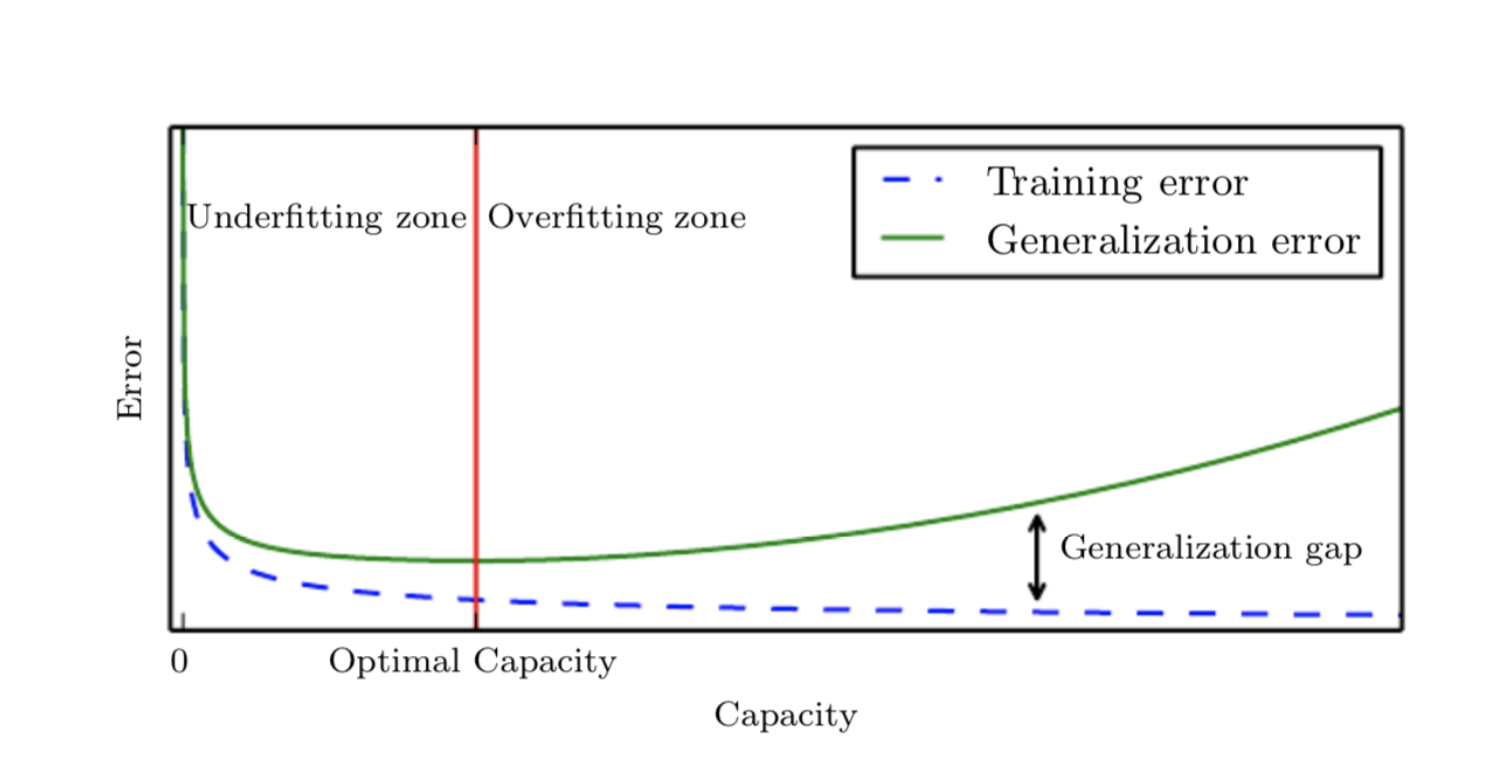

There is usually a bias-variance trade-off caused by model complexity.

Complex models (many parameters) usually have lower bias but higher variance.

Simple models (few parameters) usually have higher bias but lower variance.
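For squared-error loss the trade-off can be written down exactly. Assuming the data follow $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\operatorname{Var}(\varepsilon) = \sigma^2$ (notation introduced here for illustration), the expected prediction error of a fitted model $\hat{f}$ at a point $x$ decomposes as:

$$
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The expectation is taken over training sets and noise. Making a model more complex typically shrinks the first term and grows the second.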

Problem with Linear Regression

Multicollinearity can increase the variance of the coefficient estimates and make the estimates very sensitive to minor changes in the model.
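The effect can be seen in a small simulation. The sketch below refits ordinary least squares many times on fresh noise, once with two independent predictors and once with two nearly identical ones, and compares how much the coefficient estimates move. The helper `ols_coef_std`, the sample size, and the noise levels are assumptions chosen for illustration, and the model has no intercept to keep the code short.

```python
import numpy as np

def ols_coef_std(X, beta, n_trials=500, noise=1.0, seed=0):
    """Refit OLS on fresh noise draws and return the std of each coefficient."""
    rng = np.random.default_rng(seed)
    fits = []
    for _ in range(n_trials):
        y = X @ beta + rng.normal(scale=noise, size=X.shape[0])
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        fits.append(coef)
    return np.std(fits, axis=0)

rng = np.random.default_rng(1)
n = 100
x1 = rng.normal(size=n)
x2_independent = rng.normal(size=n)
x2_collinear = x1 + rng.normal(scale=0.05, size=n)   # nearly a copy of x1
beta = np.array([1.0, 1.0])

std_independent = ols_coef_std(np.column_stack([x1, x2_independent]), beta)
std_collinear = ols_coef_std(np.column_stack([x1, x2_collinear]), beta)
print(std_independent.round(3), std_collinear.round(3))
```

The collinear design should show a much larger spread in both coefficients, even though the true coefficients and the noise level are the same in both runs.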

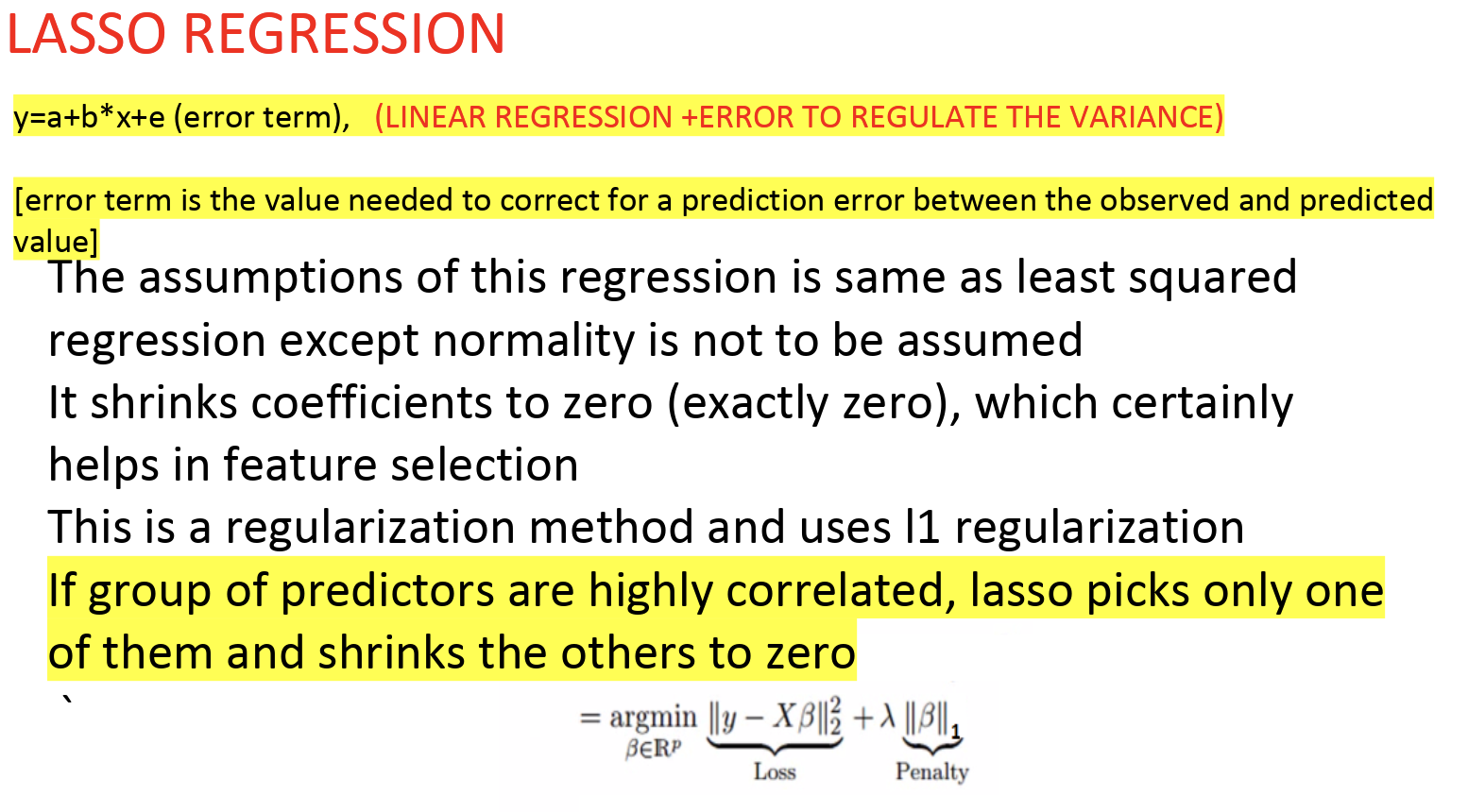

Ridge and Lasso Regression

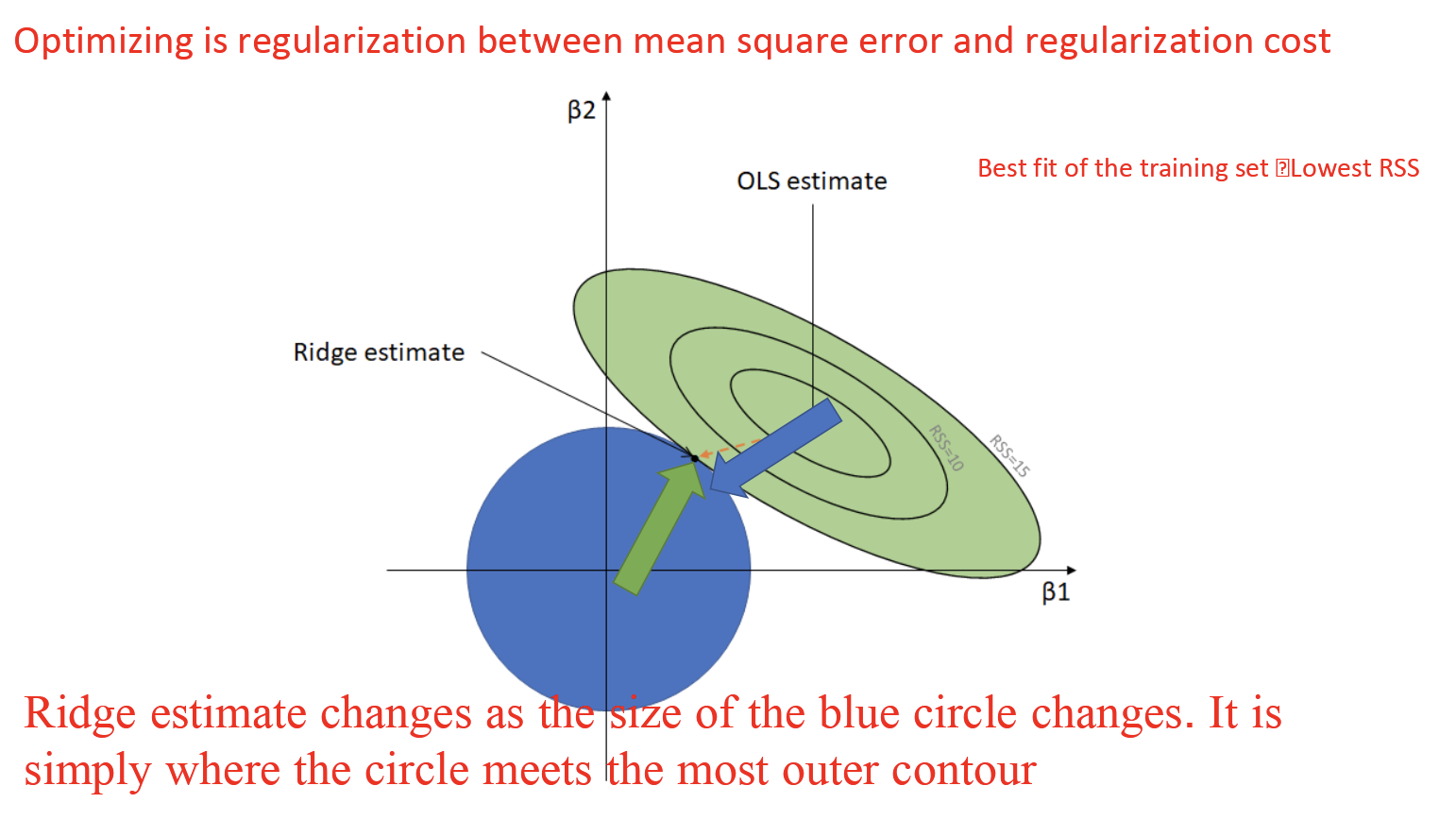

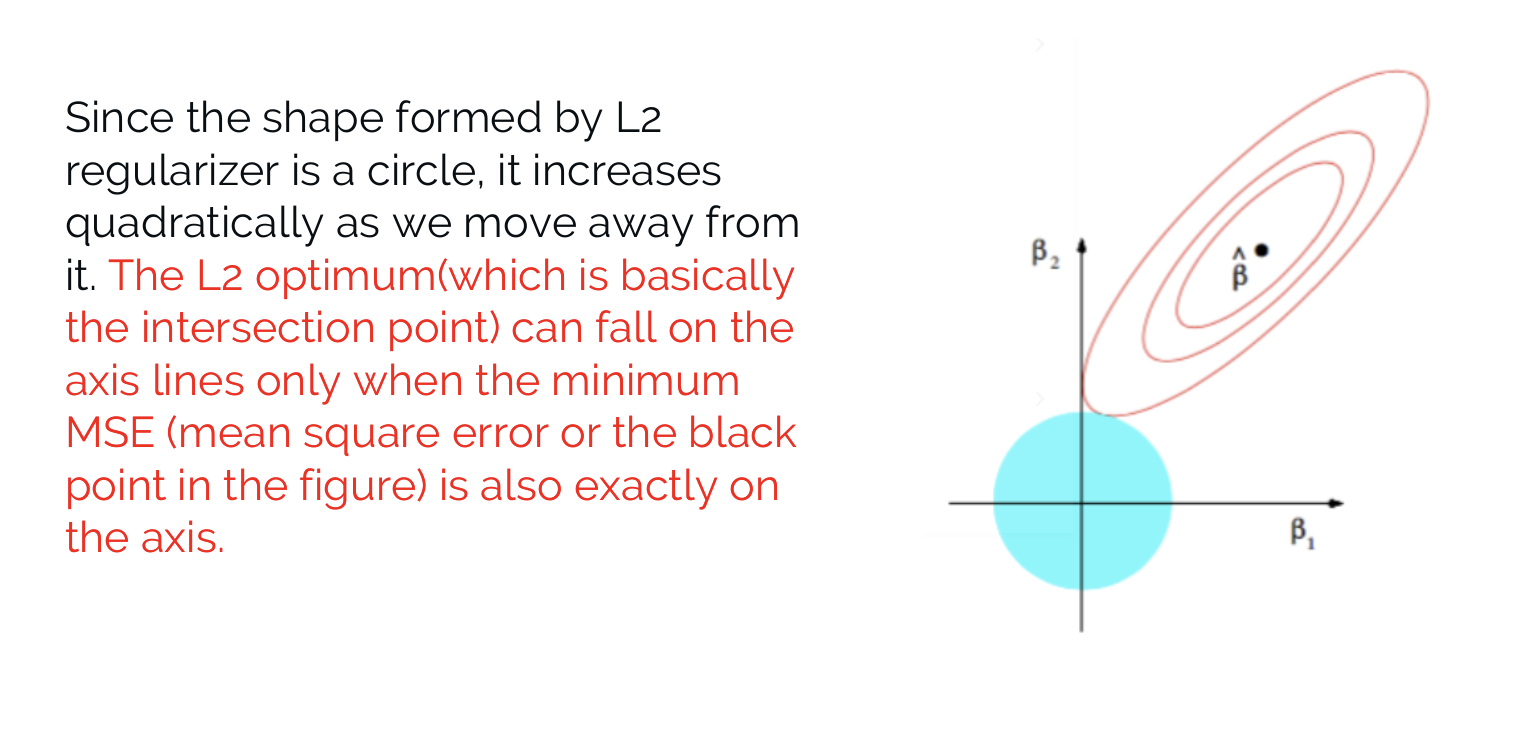

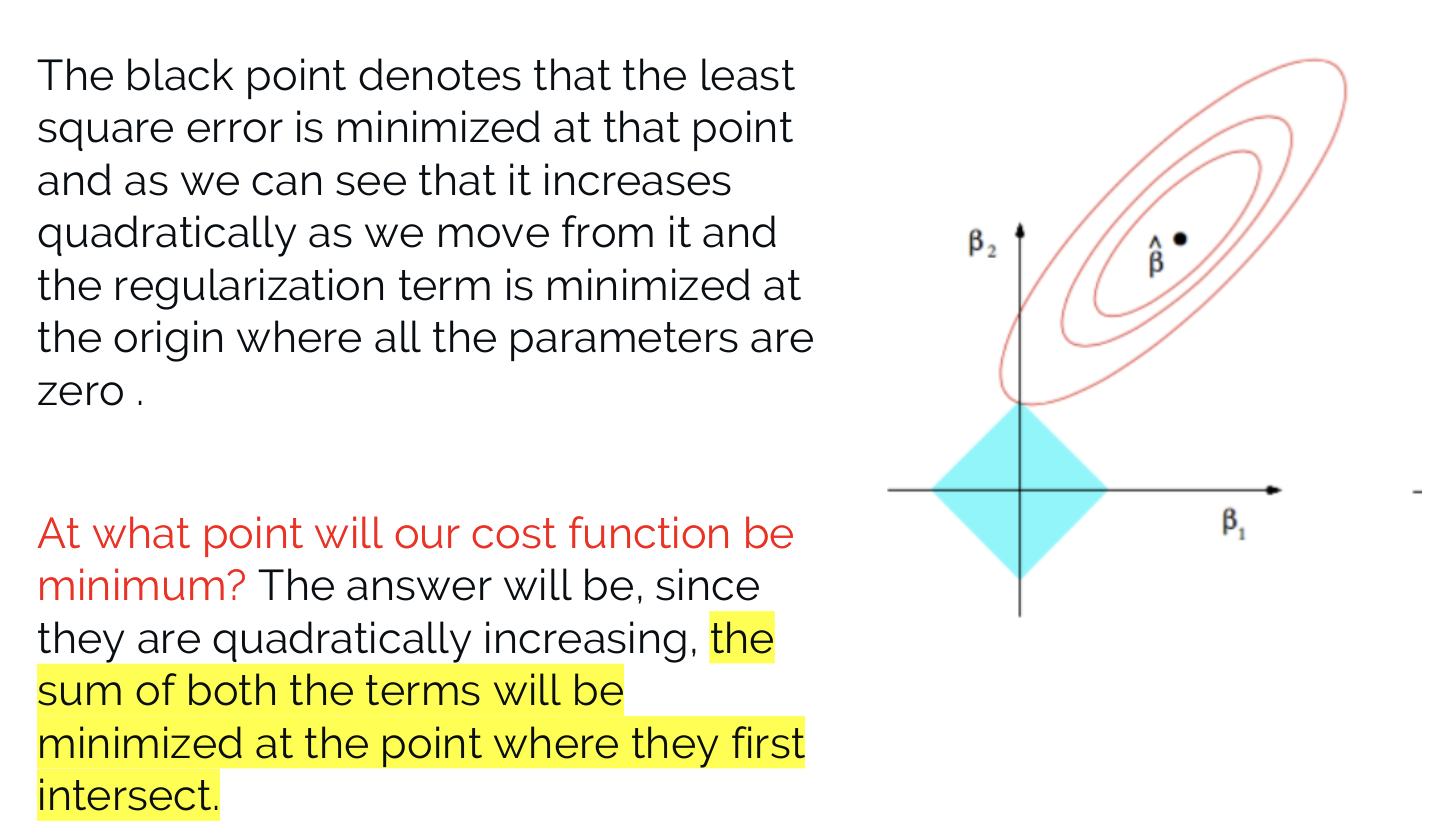

Ridge regression is a technique used when the data suffers from multicollinearity (the independent variables are highly correlated).

Under multicollinearity, the least-squares estimates are still unbiased, but their variances are large, so an individual estimate can fall far from the true value.

By adding a degree of bias (a penalty, also called shrinkage) to the regression estimates, ridge regression reduces their standard errors.
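A minimal sketch of this idea uses the closed-form ridge estimate, which solves $(X^\top X + \lambda I)\,\beta = X^\top y$. The function name `ridge_fit`, the penalty value `lam=10.0`, and the synthetic collinear data are assumptions made for illustration; setting `lam=0.0` recovers ordinary least squares. No intercept is fitted, to keep the example short.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge estimate: solve (X^T X + lam * I) beta = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.05, size=n)   # highly correlated with x1
X = np.column_stack([x1, x2])
true_beta = np.array([1.0, 1.0])

# Refit both estimators on many fresh noise draws to compare their spread.
ols_fits, ridge_fits = [], []
for _ in range(500):
    y = X @ true_beta + rng.normal(size=n)
    ols_fits.append(ridge_fit(X, y, lam=0.0))     # lam = 0 is plain least squares
    ridge_fits.append(ridge_fit(X, y, lam=10.0))  # hypothetical penalty strength

ols_std = np.std(ols_fits, axis=0)
ridge_std = np.std(ridge_fits, axis=0)
ridge_mean = np.mean(ridge_fits, axis=0)
print(ols_std.round(3), ridge_std.round(3), ridge_mean.round(3))
```

The ridge estimates should vary far less across refits, at the cost of an average that sits slightly away from the true coefficients. In practice the penalty strength is usually chosen by cross-validation rather than fixed in advance.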