25 Naive Bayes

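Naive Bayes is a generative model, and there is an important distinction between generative and discriminative models. A generative model learns how the data is produced: the class prior and the distribution of the attributes given the class. From these, Bayes' theorem yields the probability of the class given the attributes. A discriminative model learns the probability of the class given the attributes directly.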

Bayes Classifier

A probabilistic framework for solving classification problems

A and C are random variables (A: the attributes, C: the class)

Joint probability: Pr(A=a,C=c)

Conditional probability: Pr(C=c | A=a)

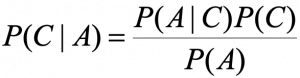

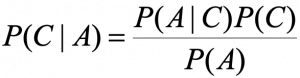

Relationship between joint and conditional probability distributions

Pr(A=a, C=c) = Pr(C=c | A=a) Pr(A=a) = Pr(A=a | C=c) Pr(C=c)

Bayes Theorem

Pr(C=c | A=a) = Pr(A=a | C=c) Pr(C=c) / Pr(A=a)

Naive Bayes Use Cases

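Naive Bayes works well on very high-dimensional problems because it makes a very strong assumption: the attributes are conditionally independent given the class. Very high-dimensional problems suffer from the curse of dimensionality: without huge amounts of data, it is difficult to estimate anything reliably in a high-dimensional space. The independence assumption sidesteps this, because the distribution of each attribute given the class can be estimated on its own. Example: constructing a spam filter, where every word in the vocabulary is a feature.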
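To make the "strong assumption" argument concrete, the sketch below counts the class-conditional parameters needed with and without the independence assumption. It assumes every attribute is binary (for example, a word is either present or absent in an email); the function names are hypothetical, and the class prior, which adds k - 1 parameters to both models, is left out.

```python
def full_joint_params(n_features: int, n_classes: int) -> int:
    # Without any independence assumption, each class needs a full distribution
    # over all 2**n attribute combinations (minus 1, since they must sum to 1).
    return n_classes * (2 ** n_features - 1)


def naive_bayes_params(n_features: int, n_classes: int) -> int:
    # Under conditional independence, each class needs only one Bernoulli
    # parameter P(A_i = 1 | C = c) per attribute.
    return n_classes * n_features


# A toy spam filter with a 30-word vocabulary and two classes (spam / not spam).
print(full_joint_params(30, 2))   # 2147483646
print(naive_bayes_params(30, 2))  # 60
```

A realistic vocabulary has thousands of words, at which point the full joint table is hopeless to estimate from data, while the naive model grows only linearly with the number of features.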

Example 1:

Given:

A doctor knows that meningitis (M) causes a stiff neck (S) 50% of the time: P(S|M) = 0.5

Prior probability of any patient having meningitis is 1/50,000: P(M) = 1/50,000

Prior probability of any patient having a stiff neck is 1/20: P(S) = 1/20

If a patient has a stiff neck, what is the probability that they have meningitis, P(M|S)?
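Plugging the given numbers into Bayes' theorem gives P(M|S) = P(S|M) P(M) / P(S). A minimal Python check using exact fractions (the variable names are just for illustration):

```python
from fractions import Fraction

p_s_given_m = Fraction(1, 2)   # P(S|M): meningitis causes a stiff neck 50% of the time
p_m = Fraction(1, 50_000)      # P(M): prior probability of meningitis
p_s = Fraction(1, 20)          # P(S): prior probability of a stiff neck

# Bayes' theorem: P(M|S) = P(S|M) * P(M) / P(S)
p_m_given_s = p_s_given_m * p_m / p_s

print(p_m_given_s)         # 1/5000
print(float(p_m_given_s))  # 0.0002
```

So even with a stiff neck, meningitis remains very unlikely (0.02%), because its prior probability is so small.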

Example 2:

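Given a new instance, predict its label (Play = Yes or No). The probabilities below are estimated from a training set of 14 days, 9 labeled Yes and 5 labeled No.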

x’=(Outlook=Sunny, Temperature=Cool, Humidity=High, Wind=Strong)

P(Outlook=Sunny|Play=Yes) = 2/9

P(Temperature=Cool|Play=Yes) = 3/9

P(Humidity=High|Play=Yes) = 3/9

P(Wind=Strong|Play=Yes) = 3/9

P(Play=Yes) = 9/14

P(Outlook=Sunny|Play=No) = 3/5

P(Temperature=Cool|Play=No) = 1/5

P(Humidity=High|Play=No) = 4/5

P(Wind=Strong|Play=No) = 3/5

P(Play=No) = 5/14

Let’s start. Because P(x’) is the same for both classes, we can drop it and compare unnormalized scores (∝ means "proportional to"):

P(Yes|x’) ∝ [P(Sunny|Yes) P(Cool|Yes) P(High|Yes) P(Strong|Yes)] P(Play=Yes) = 0.0053

P(No|x’) ∝ [P(Sunny|No) P(Cool|No) P(High|No) P(Strong|No)] P(Play=No) = 0.0206

Since P(Yes|x’) < P(No|x’), we label x’ as “No”.
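The arithmetic of Example 2 can be reproduced with exact fractions. This sketch hard-codes the conditional probabilities listed there (the dictionary layout is just one convenient choice) and also normalizes the two scores, which shows that the actual posterior P(No|x’) is roughly 0.795 once P(x’) is divided out.

```python
from fractions import Fraction as F
from math import prod

# Probabilities from Example 2, keyed by class label.
priors = {"Yes": F(9, 14), "No": F(5, 14)}
likelihoods = {
    # P(Sunny|c), P(Cool|c), P(High|c), P(Strong|c)
    "Yes": [F(2, 9), F(3, 9), F(3, 9), F(3, 9)],
    "No": [F(3, 5), F(1, 5), F(4, 5), F(3, 5)],
}

# Unnormalized score for each class: P(c) * product of P(attribute value | c).
scores = {c: priors[c] * prod(likelihoods[c]) for c in priors}

# Dividing by the sum of the scores recovers the true posteriors P(c|x').
total = sum(scores.values())
posteriors = {c: s / total for c, s in scores.items()}

label = max(scores, key=scores.get)
for c in scores:
    print(c, round(float(scores[c]), 4), round(float(posteriors[c]), 3))
print("Predicted label:", label)
```

Normalizing does not change the decision, which is why the unnormalized scores are enough for classification.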

Problem: Players will play if the weather is sunny. Is this statement correct?
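One way to check the statement with the numbers already given in Example 2 is to compute P(Yes|Sunny) with Bayes' theorem, obtaining P(Sunny) from the law of total probability. This sketch uses only the Outlook attribute and the class priors:

```python
from fractions import Fraction as F

p_yes, p_no = F(9, 14), F(5, 14)
p_sunny_given_yes, p_sunny_given_no = F(2, 9), F(3, 5)

# Law of total probability: P(Sunny) = P(Sunny|Yes)P(Yes) + P(Sunny|No)P(No)
p_sunny = p_sunny_given_yes * p_yes + p_sunny_given_no * p_no

# Bayes' theorem: P(Yes|Sunny) = P(Sunny|Yes)P(Yes) / P(Sunny)
p_yes_given_sunny = p_sunny_given_yes * p_yes / p_sunny

print(p_sunny)            # 5/14
print(p_yes_given_sunny)  # 2/5
```

With these counts P(Yes|Sunny) = 0.4, so P(No|Sunny) = 0.6: on this data, a sunny day alone makes "No" the more likely label, and the statement is not supported.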

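How does the Naive Bayes algorithm work?

Naive Bayes applies Bayes' theorem with the "naive" assumption that the attributes A1, ..., An are conditionally independent given the class, so Pr(A1, ..., An | C) = Pr(A1 | C) × ... × Pr(An | C). To classify a new instance, it computes Pr(C=c) × Pr(A1=a1 | C=c) × ... × Pr(An=an | C=c) for every class c and predicts the class with the largest value, exactly as in Example 2.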
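The counting-and-multiplying procedure used in Example 2 can be sketched as a small classifier for categorical attributes. This is an illustrative implementation, not a library API: the names `train` and `predict` are hypothetical, probabilities are plain relative frequencies with no smoothing (so an attribute value never seen with a class gives that class a score of 0), and the toy rows in the usage example are made up.

```python
from collections import Counter, defaultdict
from fractions import Fraction


def train(rows, labels):
    """Estimate P(C=c) and the counts behind P(A_i=v | C=c) from categorical data."""
    class_counts = Counter(labels)
    # cond_counts[c][i][v] = number of class-c rows whose i-th attribute equals v
    cond_counts = defaultdict(lambda: defaultdict(Counter))
    for row, c in zip(rows, labels):
        for i, v in enumerate(row):
            cond_counts[c][i][v] += 1
    n = len(labels)
    priors = {c: Fraction(k, n) for c, k in class_counts.items()}
    return priors, cond_counts, class_counts


def predict(model, x):
    """Return the class maximizing P(C=c) * prod_i P(A_i=x_i | C=c), plus all scores."""
    priors, cond_counts, class_counts = model
    scores = {}
    for c, prior in priors.items():
        score = prior
        for i, v in enumerate(x):
            # Relative frequency of value v for attribute i among class-c rows
            score *= Fraction(cond_counts[c][i][v], class_counts[c])
        scores[c] = score
    return max(scores, key=scores.get), scores


# Hypothetical toy data: (Outlook, Humidity) -> Play
rows = [("Sunny", "High"), ("Sunny", "Normal"), ("Rain", "High"), ("Rain", "Normal")]
labels = ["No", "Yes", "Yes", "Yes"]

model = train(rows, labels)
label, scores = predict(model, ("Sunny", "High"))
print(label, scores)
```

Given the 14-day training set behind Example 2 (not reproduced here), the same two functions should reproduce its scores, since those probabilities are also plain relative frequencies.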
