5 The Gibbs-Boltzmann distribution. The Maxwell distribution of velocities.

In the preceding Chapter, we connected a dynamical description of matter at the molecular level with macroscopic observables, such as pressure and temperature, no small feat. Furthermore, we were able to make predictions about the time evolution of the system. To make the connection, we applied notions of statistics. We have assumed that there is a steady state ensemble of configurations (or “microstates”) the system will continue to revisit at a steady rate, on the average, and in no particular order. (The rate may be extremely small, but it should be non-vanishing. If a microstate has been visited once, it should be visited again and again in perpetuity.) The assumption of such recurrent sampling of all available configurations in random order—sometimes referred to as the “ergodic hypothesis”—is not innocent; its validity must be decided on a case to case basis. When the assumption does hold, one can say that the system can achieve equilibrium. Equilibrium is something that may take some time to establish after the system was prepared since there is no guarantee that the system was prepared in a typical configuration. This is analogous to the so called “beginners’ luck” in gambling, which typically runs out as the gambler continues to play. With these provisos in mind, let us now extend our treatment from nearly non-interacting gases to systems where particles actually interact; in the vast majority of systems of practical interest, interactions are present and are significant. We will continue to assume that equilibrium can be, in fact, established.

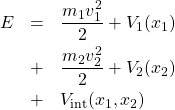

First off, what is “interaction”? In dynamical terms, interaction between two objects means that one object imposes some constraints on the motion of the other object. Consider the following energy function for two degrees of freedom:

(1)

where ![]() and

and ![]() are the velocities corresponding to the degrees of freedom 1 and 2, respectively.

are the velocities corresponding to the degrees of freedom 1 and 2, respectively.

The top line on the r.h.s. contains terms that depend exclusively on the coordinate and velocity of particle 1. The middle line contains terms that depend exclusively on the coordinate and velocity of particle 2. In contrast, the bottom line contains a function that depends on the configuration of both particles. The full potential energy is, then, a sum of three terms:

(2) ![]()

By construction, the term ![]() can not be decomposed into a sum of some function that depends solely on

can not be decomposed into a sum of some function that depends solely on ![]() and some function that depends solely on

and some function that depends solely on ![]() :

:

(3) ![]()

Clearly, then, the force acting on object 1 is modified by the presence of object 2, because differentiation with respect to ![]() does not rid us from the variable

does not rid us from the variable ![]() in the expression for the force acting on particle 1:

in the expression for the force acting on particle 1:

(4) ![]()

which is clearly a function of both ![]() and

and ![]() . Indeed, suppose, to the contrary, that

. Indeed, suppose, to the contrary, that ![]() is not a function of

is not a function of ![]() , thus implying

, thus implying ![]() , where

, where ![]() is some function of

is some function of ![]() but not

but not ![]() . Integrating this equation with respect to

. Integrating this equation with respect to ![]() yields

yields ![]() , where

, where ![]() is

is ![]() independent. (

independent. (![]() could be a function of

could be a function of ![]() .) But this kind of additive form is expressly forbidden by the condition (3), thus proving that for non-additive potentials, object 2 exerts a force on object 1. By the same token, the force acting upon object 2 will depend on

.) But this kind of additive form is expressly forbidden by the condition (3), thus proving that for non-additive potentials, object 2 exerts a force on object 1. By the same token, the force acting upon object 2 will depend on ![]() as well. The presence of a term

as well. The presence of a term ![]() of the kind satisfying condition (3), in the full energy function, thus means the degrees of freedom in question are interacting, or “coupled”.

of the kind satisfying condition (3), in the full energy function, thus means the degrees of freedom in question are interacting, or “coupled”.

More generally, we can state that a set of degrees of freedom are non-interacting, if the full energy function is additive, i.e., it can be decomposed into a sum of energy functions each pertaining to an individual degree of freedom and there are no “cross-terms” that couple the degrees of freedom:

(5) ![]()

where ![]() is the energy function for the degree of freedom

is the energy function for the degree of freedom ![]() .

.

The above notions on the additivity of energies of independent degrees of freedom have two very important implications:

- Energy can be used as an indicator for whether interactions have actually occurred. Indeed, we saw that interactions change the state of the motion of the system—sometimes referred to as the “microstate”—since they amount to a non-vanishing force, by Eq. (4). Thus changes in the value of the energy can be used to bookkeep the microstates of a system. Indeed, two distinct values of energy necessarily correspond to two distinct microstates. The converse is not necessarily true, however. Furthermore, a great deal of ambiguity arises when two or more distinct microstates are degenerate, i.e, have the same value of energy. This ambiguity is thus intrinsically built into a bookkeeping scheme based on energies and, as we will see later, is impossible to avoid in practice. Fortunately, this complication can (and will) be dealt with and has colossal significance in applications.

- The lack of mutual correlation between two degrees of freedom, whose energies simply add to make up the total energy, allows one to explicitly determine the functional form of the dependence of the probability

of microstate

of microstate  on the energy

on the energy  of that microstate. This is because probabilities of independent events multiply, as we saw earlier.

of that microstate. This is because probabilities of independent events multiply, as we saw earlier.

Let’s work this out mathematically. Since we have agreed that the energy is the only quantity we will keep track of, the probability of microstate ![]() is determined exclusively by its energy

is determined exclusively by its energy ![]() :

:

(6) ![]()

where ![]() is a specific but unknown, as of yet, function, and the quantity

is a specific but unknown, as of yet, function, and the quantity

(7) ![]()

is a normalization factor ensuring our probabilities are normalized:

(8) ![]()

which formally reflects the notion that the system is in some microstate with probability one.

As written in Eq. (6), the definition of the function ![]() is still ambiguous. Indeed, if we multiplied all of the

is still ambiguous. Indeed, if we multiplied all of the ![]() ‘s by a fixed number, the value of the weights

‘s by a fixed number, the value of the weights ![]() would not change since

would not change since ![]() would also be multiplied by the same fixed number, by Eq. (7). To remove this ambiguity, we impose an additional condition that the quantity

would also be multiplied by the same fixed number, by Eq. (7). To remove this ambiguity, we impose an additional condition that the quantity ![]() be equal to the total number of accessible microstates, subject to some constraints of choice. (Common constraints include things like fixing the temperature and/or volume of the system, or maintaining a certain value of pressure and particle number, etc.) The (as of yet unknown function)

be equal to the total number of accessible microstates, subject to some constraints of choice. (Common constraints include things like fixing the temperature and/or volume of the system, or maintaining a certain value of pressure and particle number, etc.) The (as of yet unknown function) ![]() , then, can be thought of as the contribution of state

, then, can be thought of as the contribution of state ![]() to the total number of thermally available states. The number of accessible states has a spooky property that it does not have to be integer, in seeming conflict with the expectation that the number of configurations can obtained by ordering them in some way and then counting them using ordinal numbers. Likewise, the contribution

to the total number of thermally available states. The number of accessible states has a spooky property that it does not have to be integer, in seeming conflict with the expectation that the number of configurations can obtained by ordering them in some way and then counting them using ordinal numbers. Likewise, the contribution ![]() of state

of state ![]() to the number of available states can be less than one! I will illustrate how this apparent lack of ordinality comes about using an example. Suppose you live in a 3-story home and your friend lives in a 5-story home; each story has exactly one room. Clearly you can be in one room at a time, and so there are 3 distinct configurations when you are home. Likewise, your friend has 5 distinct configurations, and the compound you+friend system has

to the number of available states can be less than one! I will illustrate how this apparent lack of ordinality comes about using an example. Suppose you live in a 3-story home and your friend lives in a 5-story home; each story has exactly one room. Clearly you can be in one room at a time, and so there are 3 distinct configurations when you are home. Likewise, your friend has 5 distinct configurations, and the compound you+friend system has ![]() distinct configurations. Indeed, for each of your 3 configurations, your friend has 5. Now suppose you really don’t like going upstairs much because your wi-fi router is on the ground floor and the signal quality deteriorates as your go upstairs. (This is your constraint.) As a result, you spend say, 60% of your time on floor 1, 30% on floor 2, and the remaining 10% on floor 3. Being the likeliest one, the 1st floor configuration is counted as unconditionally accessible and so contributes exactly 1 to the total number of accessible configurations. The 2nd floor configuration contributes only

distinct configurations. Indeed, for each of your 3 configurations, your friend has 5. Now suppose you really don’t like going upstairs much because your wi-fi router is on the ground floor and the signal quality deteriorates as your go upstairs. (This is your constraint.) As a result, you spend say, 60% of your time on floor 1, 30% on floor 2, and the remaining 10% on floor 3. Being the likeliest one, the 1st floor configuration is counted as unconditionally accessible and so contributes exactly 1 to the total number of accessible configurations. The 2nd floor configuration contributes only ![]() , and the 3rd floor configuration contributes only

, and the 3rd floor configuration contributes only ![]() . The resulting accessible number of configurations is, then,

. The resulting accessible number of configurations is, then, ![]() . It is substantially less than the number of configurations that would be accessible without any constraints, i.e., 3. This type of reasoning may strike you as odd, so let’s look at a simpler yet more extreme example. Suppose you have a fair coin. Clearly there are exactly two distinct configurations: heads and tail. Correct? Now suppose the coin is slightly unfair, say, the odds of heads vs. tails are 5 vs. 4. How many accessible configurations does that correspond to? To drive home the point that this number is less than 2, imagine instead that the odds are grotesquely skewed toward heads, say,

. It is substantially less than the number of configurations that would be accessible without any constraints, i.e., 3. This type of reasoning may strike you as odd, so let’s look at a simpler yet more extreme example. Suppose you have a fair coin. Clearly there are exactly two distinct configurations: heads and tail. Correct? Now suppose the coin is slightly unfair, say, the odds of heads vs. tails are 5 vs. 4. How many accessible configurations does that correspond to? To drive home the point that this number is less than 2, imagine instead that the odds are grotesquely skewed toward heads, say, ![]() vs.

vs. ![]() . Nobody in their right mind would bet even a penny on the possibility of observing tails, since

. Nobody in their right mind would bet even a penny on the possibility of observing tails, since ![]() is equivalent to zero for all intents and purposes. This means that the accessible number of states for this grossly unfair coin is just one, the accessible state being the heads. The number of accessible states for the slightly unfair coin, in a sense, interpolates between the fair coin and the grossly unfair coin, yielding:

is equivalent to zero for all intents and purposes. This means that the accessible number of states for this grossly unfair coin is just one, the accessible state being the heads. The number of accessible states for the slightly unfair coin, in a sense, interpolates between the fair coin and the grossly unfair coin, yielding: ![]() . Now suppose your friend has a similar problem with her wi-fi in her house and her odds of being on floors 1 through 5 are, respectively: 40%, 30%, 20%, 7%, and 3%. The resulting number of accessible states is, then

. Now suppose your friend has a similar problem with her wi-fi in her house and her odds of being on floors 1 through 5 are, respectively: 40%, 30%, 20%, 7%, and 3%. The resulting number of accessible states is, then ![]() . And what is the total number of accessible states for the compound you+friend system? It is

. And what is the total number of accessible states for the compound you+friend system? It is ![]() , well below the 15 states that would be accessible without the constraints.

, well below the 15 states that would be accessible without the constraints.

Now consider a general compound system consisting of two non-interacting subsystems, 1 and 2, and label their microstates with indices ![]() and

and ![]() , respectively. The probability of microstate

, respectively. The probability of microstate ![]() , whose energy is

, whose energy is ![]() , is, then

, is, then

(9) ![]()

Likewise, the probability of microstate ![]() , whose energy is

, whose energy is ![]() for system 2 is

for system 2 is

(10) ![]()

![]() is the number of accessible states for system 1 and

is the number of accessible states for system 1 and ![]() for system 2.

for system 2.

On the one hand, the probability to observe states ![]() and

and ![]() simultaneously is given by the product of the probabilities of the standalone probabilities, as we discussed in the preceding Chapter:

simultaneously is given by the product of the probabilities of the standalone probabilities, as we discussed in the preceding Chapter:

(11) ![]()

One the other hand, Eq. (6) tells us the probability of the ![]() configuration is

configuration is

(12) ![]()

because the energy of the combined system is simply ![]() . Furthermore, the total number of accessible states is determined by multiplying those numbers for the standalone systems 1 and 2, as we discussed above:

. Furthermore, the total number of accessible states is determined by multiplying those numbers for the standalone systems 1 and 2, as we discussed above:

(13) ![]()

Putting together Eqs. (28)-(13) yields

(14) ![]()

This equation applies for any values of the two quantities ![]() and

and ![]() and, thus, defines a functional equation for the (yet unknown) function

and, thus, defines a functional equation for the (yet unknown) function ![]() :

:

(15) ![]()

Functional equations generally do not have a unique solution and are often hard to solve. But, in this particular case, we are in luck since the solution is not hard to find and is sufficiently unique for our purposes. First, set ![]() in Eq. (15) to convince yourself that

in Eq. (15) to convince yourself that

(16) ![]()

Next, differentiate Eq. (15) with respect to ![]() , where one must use the chain rule for the l.h.s.:

, where one must use the chain rule for the l.h.s.: ![]() , where the prime means the derivative with respect to the argument of the function:

, where the prime means the derivative with respect to the argument of the function:

(17) ![]()

And so one gets

(18) ![]()

Now again set ![]() to obtain

to obtain

(19) ![]()

where defined the constant ![]() to equal the negative derivative of

to equal the negative derivative of ![]() at the origin:

at the origin:

(20) ![]()

The minus sign is artificial but will make life easier in the future. Eq. (19) is easily solved by separating the variables and integrating: ![]() , where the constant is fixed at

, where the constant is fixed at ![]() , in view of Eq. (16). As a result, we obtain a remarkably simple expression for the function

, in view of Eq. (16). As a result, we obtain a remarkably simple expression for the function ![]() :

:

(21) ![]()

We are left with determining the coefficient ![]() . Consider a particle freely moving in 1D (“moving freely” = “not subjected to an external force”):

. Consider a particle freely moving in 1D (“moving freely” = “not subjected to an external force”): ![]() , where I used the subscript

, where I used the subscript ![]() to emphasize that the motion is along one spatial direction, chosen to be

to emphasize that the motion is along one spatial direction, chosen to be ![]() for concreteness. Distinct microstates of the particles differ only by their velocities and, in fact, are uniquely labelled by the corresponding value of

for concreteness. Distinct microstates of the particles differ only by their velocities and, in fact, are uniquely labelled by the corresponding value of ![]() itself. Hence,

itself. Hence,

(22) ![]()

where the ![]() -independent proportionality constant can be determined by requiring that the distribution be normalized to 1:

-independent proportionality constant can be determined by requiring that the distribution be normalized to 1: ![]() . (The lack of dependence of the constant

. (The lack of dependence of the constant ![]() on the velocity is, perhaps, best appreciated in Quantum Mechanics, through the concept of “the phase space”, but see below, where we discuss the velocity distribution in 3D.) This yields, after consulting the table of definite integrals:

on the velocity is, perhaps, best appreciated in Quantum Mechanics, through the concept of “the phase space”, but see below, where we discuss the velocity distribution in 3D.) This yields, after consulting the table of definite integrals: ![]() :

:

(23) ![]()

Next we compute the average of the velocity squared:

(24) ![]()

On the other hand, in the preceding Chapter we defined the temperature according to ![]() . This immediately yields:

. This immediately yields:

(25) ![]()

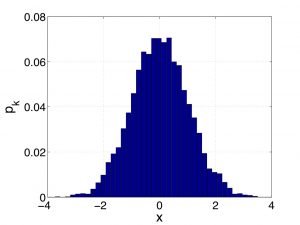

As a result we may write, for the probability of state ![]() , what is called the Gibbs-Boltzmann distribution:

, what is called the Gibbs-Boltzmann distribution:

(26) ![]()

where the number of accessible states:

(27) ![]()

is sometimes called the partition function, because it shows how the overall number of accessible states is partitioned among distinct microstates.

Eq. (26), together with Eqs. (25) and (27) of course, is arguably the most important result of Thermodynamics and Statistical Mechanics! The velocity distribution in Eq. (23) is also quite important in its own right. It is called the Maxwell distribution of velocities.

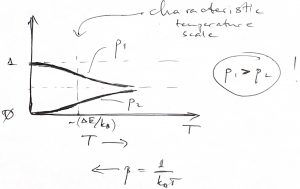

It is worthwhile to consider the simplest non-trivial arrangement where there two exactly two states. Such a two-level system is a good model for the electronic or nuclear spin 1/2 in a magnetic field. The two microstates correspond to the spin up and down. The lowest energy state is called the ground state. States at higher energies are called excited states. There is only one excited state in a two-level system, of course.

(28) ![]()

and

(29) ![]()

Note ![]() , as it should, and that the probabilities depend only on energy differences, not the absolute values of energies. This is consistent with our earlier realization that only increments of the potential energy matter, not its absolute value. In other words, observable quantities should be independent of the energy reference. (Note forces are observable, energy is not!)

, as it should, and that the probabilities depend only on energy differences, not the absolute values of energies. This is consistent with our earlier realization that only increments of the potential energy matter, not its absolute value. In other words, observable quantities should be independent of the energy reference. (Note forces are observable, energy is not!)

We next sketch the two weights ![]() and

and ![]() .

.

We note that ![]() always, i.e., the ground state of the two-level system is always likelier to be occupied than the excited state. Only in the limit of infinite temperature,

always, i.e., the ground state of the two-level system is always likelier to be occupied than the excited state. Only in the limit of infinite temperature, ![]() ,

, ![]() , do the two states become equally likely. Incidentally, this is the limit where the number of accessible states,

, do the two states become equally likely. Incidentally, this is the limit where the number of accessible states, ![]() , approaches its largest possible value of 2.

, approaches its largest possible value of 2.

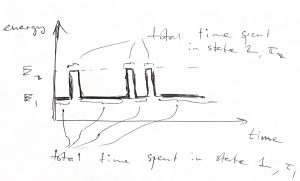

Let’s review the meaning of the word “probability” in a practical context. One way to connect the weights ![]() to experiment is that given a (large) number

to experiment is that given a (large) number ![]() of identical systems, we expect that at any given time:

of identical systems, we expect that at any given time: ![]() will be in state 1, while

will be in state 1, while ![]() will be in state 2. Hereby,

will be in state 2. Hereby,

(30) ![]()

(31) ![]()

,

consistent with Eqs. (28) and (29). This is an example of ensemble averaging. Here you are looking at many equivalent systems at the same time.

Alternatively, one can determine the odds for a two-level system to be in state 1 or 2 by bookkeeping the amount time just one such system spent in those states. Here you monitor one system over a long time, this is called time averaging:

![]()

![]()

Various quantities of interest can be determined using the statistical weights ![]() . For instance, the expectation (or average, or mean) value of the energy is

. For instance, the expectation (or average, or mean) value of the energy is

(32) ![]()

Note the expectation value of energy is never equal to its instantaneous value—i.e. the energy of either microstate—but, instead, interpolates between ![]() and

and ![]() . Note also that because

. Note also that because ![]() , the largest energy the system can have is

, the largest energy the system can have is ![]() .

.

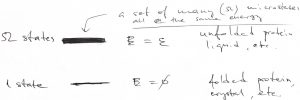

The only way to raise the expectation value of the energy is to employ more excited states. Consider, then, a more complicated example, where there is still just one ground state, at energy ![]() , but there are

, but there are ![]() excited states, each at energy

excited states, each at energy ![]() . The quantity

. The quantity ![]() is often referred to as the degeneracy of the excited state. One must be careful and remembering that there are actually

is often referred to as the degeneracy of the excited state. One must be careful and remembering that there are actually ![]() distinct excited states.

distinct excited states.

As before, the probability to be in a particular state is determined by the Boltzmann-Gibbs distribution

(33) ![]()

while the partition function ![]() is given by

is given by

(34) ![]()

We number the states starting from the ground state and use that ![]() and

and ![]() for

for ![]() , by construction.

, by construction.

The expectation value of the energy is now:

(35) ![]()

where we wrote out explicitly the ground and excited state energies ![]() and

and ![]() to drive home that the question of the probability to have a certain amount of energy is generally very different from the question of the probability to be in a microstate with that energy.

to drive home that the question of the probability to have a certain amount of energy is generally very different from the question of the probability to be in a microstate with that energy.

Indeed, the probability to have energy ![]() is

is

(36)

and can be made as close to one as we want by making the degeneracy ![]() larger.

larger.

It may be simple, but this model is not a bad approximation for the energy landscape of a protein molecule. Here the ground, lowest energy state would correspond to the protein molecule folded in its native state. Each of the unfolded states is significantly higher in energy than the native state and, individually, have no chance against the native state. When considered together, however, the unfolded states can take over given a high enough temperature. Indeed the probability to be unfolded relative to being folded is given by ratio:

(37) ![]()

At the special value of temperature, called the folding temperature ![]() , this ratio is equal to one implying the protein molecule is equally likely to folded or unfolded. Thus,

, this ratio is equal to one implying the protein molecule is equally likely to folded or unfolded. Thus,

(38) ![]()

Can you see that at ![]() , the

, the ![]() is greater than one, implying the protein molecule is likely unfolded? And vice versa for

is greater than one, implying the protein molecule is likely unfolded? And vice versa for ![]() . Your homework problems highlight just how readily the equilibrium is shifted toward the folded state or the unfolded states as the temperature is moved away from

. Your homework problems highlight just how readily the equilibrium is shifted toward the folded state or the unfolded states as the temperature is moved away from ![]() . In any event, increasing the multiplicity of the unfolded states clearly decreases the folding temperature. This makes sense, doesn’t it?

. In any event, increasing the multiplicity of the unfolded states clearly decreases the folding temperature. This makes sense, doesn’t it?

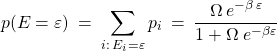

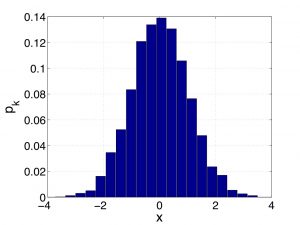

It is now time for another Digression on Statistics: We saw just moments ago that in some cases, a variable that one can use to label distinct microstates is a truly continuous variable, such as the velocity in Eq. (23). This does not prevent one from histogramming this variable, of course, but it may now be practical to use a histogram where the width of the bar is arbitrarily narrow. One reason to do this is that if in the limit of a vanishingly narrow histogram bin, the histogram becomes smooth and, hence, becomes amenable to a variety of mathematical operations, such as differentiation. Integration becomes much easier, too. One problem with shrinking the bin size is that the number of data points decreases, too, roughly proportionally with the bin size. Indeed, now there are fewer data points per bin. Below I show two sets of the weights ![]() for the same data set. The two probability distributions correspond to two different values of the bin width:

for the same data set. The two probability distributions correspond to two different values of the bin width:

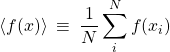

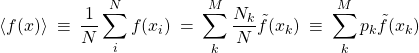

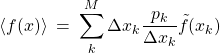

Clearly, in the limit of the vanishing bin size, the weights will vanish, too, at the same rate as the bin size does. To handle this, let us remind ourselves the definition of the average of some function ![]() over a set of data points:

over a set of data points:

(39)

When the number ![]() of data is large, we may benefit from grouping the data into

of data is large, we may benefit from grouping the data into ![]() bins, just like we did it Chapter 2:

bins, just like we did it Chapter 2:

(40)

where the index ![]() labels bins and

labels bins and ![]() is the value of

is the value of ![]() averaged over bin

averaged over bin ![]() . The weight

. The weight ![]() thus gives the partial quantity of the data falling within bin

thus gives the partial quantity of the data falling within bin ![]() , as before.

, as before.

(41)

where where ![]() is the width of bin

is the width of bin ![]() . Since the weight

. Since the weight ![]() scales linearly with

scales linearly with ![]() , the ratio

, the ratio ![]() remains finite in the

remains finite in the ![]() limit and we are justified in defining the following function:

limit and we are justified in defining the following function:

(42) ![]()

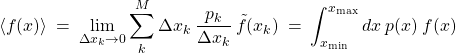

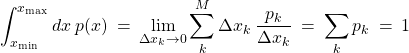

called the probability density. It is the continuous analog of the discrete distribution function ![]() . With this definition, the average in Eq. (43) can be rewritten as a definite integral:

. With this definition, the average in Eq. (43) can be rewritten as a definite integral:

(43)

,

where ![]() and

and ![]() delineate the range of the variable

delineate the range of the variable ![]() . According to its definition (42), the function

. According to its definition (42), the function ![]() is the number of points per unit interval, times the total number of data

is the number of points per unit interval, times the total number of data ![]() . Accordingly, the quantity

. Accordingly, the quantity ![]() has dimensions of inverse

has dimensions of inverse ![]() . In this sense, it is a probability density. Accordingly, when we average over the distribution, we integrate, not sum. Note that the probability density

. In this sense, it is a probability density. Accordingly, when we average over the distribution, we integrate, not sum. Note that the probability density ![]() is normalized to one, if the parent distribution

is normalized to one, if the parent distribution ![]() is:

is:

(44)

One may say that the quantity ![]() gives the partial quantity of data falling within the (infinitesimal) interval

gives the partial quantity of data falling within the (infinitesimal) interval ![]() centered at

centered at ![]() . One may also say that the function

. One may also say that the function ![]() is the inverse of the typical spacing between the data points

is the inverse of the typical spacing between the data points ![]() , times the total number of data

, times the total number of data ![]() .

.

Because the quantity ![]() vanishes for an infinitely narrow interval

vanishes for an infinitely narrow interval ![]() , one may say that the probability to for the variable

, one may say that the probability to for the variable ![]() to have a precise value, say

to have a precise value, say ![]() , is strictly zero. However, it is better to remember that the question of the probability to have a specific value is not well posed. When we have continuous distributions, we, instead ask questions like this: What is the probability for the variable

, is strictly zero. However, it is better to remember that the question of the probability to have a specific value is not well posed. When we have continuous distributions, we, instead ask questions like this: What is the probability for the variable ![]() to be contained with an interval, say,

to be contained with an interval, say, ![]() . This kind of question is answered by computing an integral:

. This kind of question is answered by computing an integral:

(45) ![]()

which could be thought of as counting all of the data within the ![]() of a histogram. As an example of using Eq. (45), consider finding the median

of a histogram. As an example of using Eq. (45), consider finding the median ![]() of a distribution. It is simply the value of the variable that splits the distribution exactly in two equal parts:

of a distribution. It is simply the value of the variable that splits the distribution exactly in two equal parts:

(46) ![]()

the last equation valid for distributions that are normalized to one. Although the median and the average of a distribution often follow each other, the median is sometimes preferable to the average, especially when the average does not even exist (![]() ). The latter situations will not be encountered in this Course, but they do arise when the distribution in question has very long tails, i.e., the probability density decays to zero slowly. (It must to decay to zero eventually, or, else, it would not be normalizable.) Working with the median is often more convenient also when, for instance, the distribution is bimodal so that the values of the variable that are numerically close to the average of the distribution are not particularly representative of the distribution.

). The latter situations will not be encountered in this Course, but they do arise when the distribution in question has very long tails, i.e., the probability density decays to zero slowly. (It must to decay to zero eventually, or, else, it would not be normalizable.) Working with the median is often more convenient also when, for instance, the distribution is bimodal so that the values of the variable that are numerically close to the average of the distribution are not particularly representative of the distribution.

Similarly to the probability distribution for a single physical quantity, we can define a distribution density for more than one distributed variable at at time. For instance, one can define a two-variable probability density according to

(47) ![]()

One can then compute the average of any function of ![]() and

and ![]() by evaluating a double integral:

by evaluating a double integral:

(48) ![]()

where we did not write out the integration limits for typographical clarity. When both the averaged function and the distribution factorize, the above integration reduces to a product of two 1D integrals:

(49) ![Rendered by QuickLaTeX.com \begin{eqnarray*} \langle f_1(x) f_2(y) \rangle\:&=&\: \iint dx \, dy \: \: p_1(x) p_2(y) \:\: f_1(x) \, f_2(y) \\ \:&=&\: \left[ \int dx \: p_1(x) \: f_1(x) \right] \times \left[ \int dy \: p_2(y) \: f_2(y) \right] \end{eqnarray*}](https://uhlibraries.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-38cf13f5f96ca6403ff0bd2f42bf714a_l3.png)

End of digression on Statistics.

The Gibbs-Boltzmann distribution is used to evaluate the probability of discretely distinct configurations (such as pregnant vs. not pregnant) and is not always straightforward to apply to situations where the variable labeling microscopic states seems to change continuously. This ambiguity is solved, more or less, in Quantum Mechanics. In the absence of a more rigorous treatment, we’ll try to get away with consistency-type reasoning. For instance, we can use the Gibbs-Boltzmann distribution to write down the probability distribution for the coordinate and velocity of a particle moving in 1D subject to the potential ![]() :

:

(50) ![]()

because the energy of the particle is ![]() . We can reasonably guess that the constant

. We can reasonably guess that the constant ![]() in front of the exponential must be independent of

in front of the exponential must be independent of ![]() . Indeed, shifting the potential over by length

. Indeed, shifting the potential over by length ![]() should simply shift the probability the distribution by the same length without multiplying it by some function of

should simply shift the probability the distribution by the same length without multiplying it by some function of ![]() . The lack of dependence of

. The lack of dependence of ![]() on

on ![]() is a bit trickier. Here it helps to note that velocity changes, due to a non-vanishing force, do not explicitly depend on the velocity itself, according to Newton’s 2nd law:

is a bit trickier. Here it helps to note that velocity changes, due to a non-vanishing force, do not explicitly depend on the velocity itself, according to Newton’s 2nd law: ![]() , see also below. In any event, Eq. (51) encodes the remarkable fact that in equilibrium, the coordinate and velocity are statistically independent! Indeed the distribution (51) factorizes into an

, see also below. In any event, Eq. (51) encodes the remarkable fact that in equilibrium, the coordinate and velocity are statistically independent! Indeed the distribution (51) factorizes into an ![]() and

and ![]() dependent part:

dependent part:

(51) ![]()

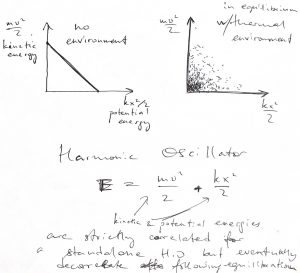

Consider, for instance, with the harmonic oscillator, whose kinetic and potential energies exactly anti-correlate in the absence of interaction with an environment. Once exposed to a thernal environment, the two energies will eventually decorrelated, the relaxation time depending on the efficiency of momentum transfer from the oscillator to the environment.

Now, the ![]() -dependent factor is sometimes called the Boltzmann distribution:

-dependent factor is sometimes called the Boltzmann distribution:

(52) ![]()

where ![]() is a normalization constant that has no

is a normalization constant that has no ![]() dependence.

dependence.

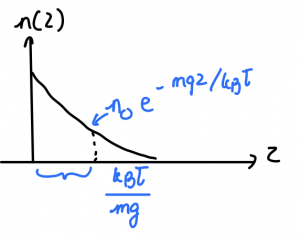

An important example is the potential of the Earth’s gravity as as a function of height ![]() , relatively to the ground:

, relatively to the ground:

(53) ![]()

It is easy to write down the resulting ![]() -dependence of the concentration of a gas because by its very meaning, the concentration of a gas at a specific location is proportional to the probability for a gas molecule to be at that location:

-dependence of the concentration of a gas because by its very meaning, the concentration of a gas at a specific location is proportional to the probability for a gas molecule to be at that location:

(54) ![]()

where ![]() is the concentration near the ground.

is the concentration near the ground.

We sketch this ![]() below:

below:

The exponential function decays very rapidly, the decay length in this particular case being determined by the quantity ![]() . Thus the thickness of the atmosphere is determined by the very same quantity

. Thus the thickness of the atmosphere is determined by the very same quantity ![]() . In reality, the temperature is not constant throughout the atmosphere and decreases quite rapidly with altitude, because of radiative cooling. As a result, the concentration profile will fall off with altitude even more rapidly as the isothermal,

. In reality, the temperature is not constant throughout the atmosphere and decreases quite rapidly with altitude, because of radiative cooling. As a result, the concentration profile will fall off with altitude even more rapidly as the isothermal, ![]() result would suggest. In any event, we arrive at a somewhat surprising result that the thickness of the atmosphere is determined not by the molecule’s size—consisent with the molecules not being in contact much of the time—but by how fast the molecules move!

result would suggest. In any event, we arrive at a somewhat surprising result that the thickness of the atmosphere is determined not by the molecule’s size—consisent with the molecules not being in contact much of the time—but by how fast the molecules move!

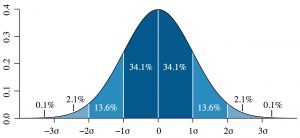

We have already written down the velocity dependent part of the distribution (23) of velocities, i.e. the Maxwell distribution, for 1D motion. Its functional form is that of so called Gaussian distribution (named after the mathematician Gauss):

(55) ![]()

with ![]() and

and ![]() . The distribution in Eq. (55) is also sometimes called the normal distribution and is incredibly important for this Course. You can convince yourself that the mean of the Gaussian distribution is given by

. The distribution in Eq. (55) is also sometimes called the normal distribution and is incredibly important for this Course. You can convince yourself that the mean of the Gaussian distribution is given by

(56) ![]()

and variance

(57) ![]()

The variance ![]() is also sometimes called the mean square deviation (msd), while its square root:

is also sometimes called the mean square deviation (msd), while its square root:

(58) ![]()

is called the root mean square deviation (rmsd), or the standard deviation. According to Eq. (57), the rmsd of the Gaussian distribution is numerically equal to the parameter ![]() , a very simple result. As can be seen in the picture below, the rmsd is a good measure of the width of the Gaussian distribution:

, a very simple result. As can be seen in the picture below, the rmsd is a good measure of the width of the Gaussian distribution:

(By M. W. Toews. Own work, based (in concept) on figure by Jeremy Kemp, on 2005-02-09, CC BY 2.5, Link)

Indeed, we see that the ![]() interval centered at the maximum

interval centered at the maximum ![]() of the distribution contains 68.2%, or so, of the data. A formal way to express this notion is:

of the distribution contains 68.2%, or so, of the data. A formal way to express this notion is: ![]() .

.

Now, we note that the mean velocity in all directions is zero:

(59) ![]()

This was obvious beforehand since the gas, as a whole, is not moving anywhere.

We are now ready to write the velocity distribution for motion in 3D. Because the contributions of the translations in the three spatial directions to the total energy are additive:

(60) ![]()

the overall distribution for the three components ![]() ,

, ![]() , and

, and ![]() is determined by simply multiplying together the respective three distributions:

is determined by simply multiplying together the respective three distributions:

(61) ![]()

Note this distribution is isotropic, i.e., does not depend on the direction of the velocity vector, but only on its length. This is expected from the fact that all directions in space are equivalent, in the absence of external forces, and that the distributions of coordinates and velocities are decoupled in equilibrium, as already discussed. The above equation is also consistent with the lack of a velocity dependent pre-exponential factor in Eq. (23). If present, the resulting 3D distribution would not be isotropic! The isotropic nature of the velocity distribution was the key component of Maxwell’s original argument.

A good way of thinking about the distribution (61) is that the partial amount of particles whose velocities fall within the parallelepiped of dimensions ![]() centered at the velocity

centered at the velocity ![]() is equal to:

is equal to:

(62) ![]()

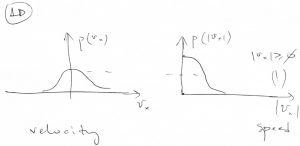

It is often convenient to ask, instead, the question: what is the distribution of speeds, not velocities? To answer this question, we note that all of the parallelepipeds contributing to a specific value of speed ![]() comprise a spherical shell of some thickness

comprise a spherical shell of some thickness ![]() centered at the origin. The volume of such a spherical shell is

centered at the origin. The volume of such a spherical shell is ![]() . Hence the distribution of the speed is

. Hence the distribution of the speed is

(63) ![]()

yielding

(64) ![]()

Compare this with the distribution of speeds in 1D, which is obtained by simply “folding” together the negative and positive wings of the velocity distribution, since both ![]() and

and ![]() imply the same speed

imply the same speed ![]() :

:

(65) ![]()

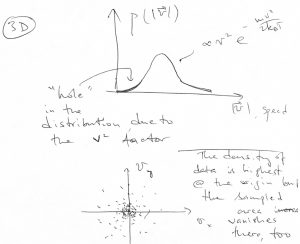

Note the 3D speed distribution has a quadratic “hole” at the origin:

Likewise, the speed distribution will have a linear “hole” of the form ![]() .

.

The “hole” comes about despite the value ![]() being the most likely value of the velocity. There is also a degeneracy of sorts, since in 3D, a whole shell of area

being the most likely value of the velocity. There is also a degeneracy of sorts, since in 3D, a whole shell of area ![]() contibutes to the same value of

contibutes to the same value of ![]() .

.

(In 2D, the amount of degeneracy goes with the ring length ![]() .)

.)

Bonus discussion:

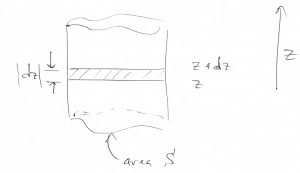

There is a rather vivid way to derive the Boltzmann distribution. Imagine a gas subject to gravity:

On the one hand, the pressure difference between the altitudes ![]() and

and ![]() should be exactly compensated by the weight of the gas contained within the layer:

should be exactly compensated by the weight of the gas contained within the layer:

![]()

where ![]() is the volume of the shaded region. Dividing by

is the volume of the shaded region. Dividing by ![]() and taking the

and taking the ![]() limit yields

limit yields

![]()

Yet according to the ideal gas law ![]() , and so

, and so

![]()

Solving the differential equation yields:

![]()

.

Lastly, we have seen that there are two ways to think about thermal phenomena. Mathematicians prefer to think in terms of probabilities. When two events are uncorrelated, the probability of the compound event factorizes: ![]() . Chemists and physicists often prefer to think in terms of energies or energy-like quantities. In this case, statistical independence, or lack of interaction between events reveals itself through additivity of energies. The two ways are essentially equivalent, the connection understood through the properties of the mathematical functions

. Chemists and physicists often prefer to think in terms of energies or energy-like quantities. In this case, statistical independence, or lack of interaction between events reveals itself through additivity of energies. The two ways are essentially equivalent, the connection understood through the properties of the mathematical functions ![]() and

and ![]() :

: ![]() or, equivalently,

or, equivalently, ![]() .

.