7 Gibbs Free Energy, Entropy of Mixing, Enthalpy, Chemical Potential, Gibbs-Duhem

Next we determine the thermodynamic potential that controls the equilibration of the volume, in addition to controlling the equilibrium value of the energy. In many, if not most, cases of interest, the volume of the system cannot be rigidly controlled. Instead, we can only be sure of the value of the pressure. For instance, most liquids do not fill their container fully, and so even if the container is completely rigid (which is an idealization) and fully sealed, the liquid will occupy only a portion of the container, the rest being occupied by its vapor or the corresponding crystal. In an open container, clearly, one can only control the pressure.

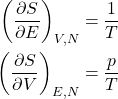

Perhaps the easiest way to determine the pertinent thermodynamic potential is to first write down the energy conservation law in a form that brings out the energy and volume dependence of the entropy:

(1) $$dS = \frac{1}{T}\,dE + \frac{p}{T}\,dV$$

We are mindful that the above equation pertains to the equilibrium values of all the quantities. Thus in equilibrium,

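(2) $$\left(\frac{\partial S}{\partial E}\right)_{V,N} = \frac{1}{T}, \qquad \left(\frac{\partial S}{\partial V}\right)_{E,N} = \frac{p}{T}$$

and, hence,

(3) $$1 - T\left(\frac{\partial S}{\partial E}\right)_{V,N} = 0$$

(4) $$p - T\left(\frac{\partial S}{\partial V}\right)_{E,N} = 0$$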

Note we have explicitly indicated that the particle number $N$ is being kept constant, which will be of use later on.

Recall that Eq. 3 resulted from minimizing the function $E - TS$ with respect to $E$. It is not hard to see that Eq. 3 results just as well from minimization with respect to $E$ of the following function

(5) $$\tilde{G}(E, V) = E - T\,S(E, V, N) + p\,V$$

At the same time, we can readily convince ourselves that minimizing this function with respect to ![]() also yields Eq. 4! To be clear, the parameters

also yields Eq. 4! To be clear, the parameters ![]() and

and ![]() are kept constant during the minimization as well. The function

are kept constant during the minimization as well. The function ![]() , then, represents the sought thermodynamic potential governing the fluctuations of both the energy and volume when the temperature and pressure are externally imposed. Just as for the thermodynamic potential

, then, represents the sought thermodynamic potential governing the fluctuations of both the energy and volume when the temperature and pressure are externally imposed. Just as for the thermodynamic potential ![]() , there are two ways to interpret Eq. 2: On the one hand, they tell us the values of temperature and pressure that are needed to achieve specific equilibrium values for the energy and volume. On the other hand, given specific values of externally imposed temperature and pressure, the equilibrium energy and volume correspond to the minimum of the function

, there are two ways to interpret Eq. 2: On the one hand, they tell us the values of temperature and pressure that are needed to achieve specific equilibrium values for the energy and volume. On the other hand, given specific values of externally imposed temperature and pressure, the equilibrium energy and volume correspond to the minimum of the function ![]() , where Eq. 2 expresses the formal condition for the location of the minimum on the

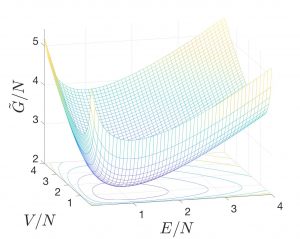

, where Eq. 2 expresses the formal condition for the location of the minimum on the ![]() surface. As an example, we show the

surface. As an example, we show the ![]() surface for the ideal gas:

surface for the ideal gas:

where we used the expression

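(6) $$S(E, V, N) = N c_V \ln\left(\frac{E}{N c_V T^\ominus}\right) + N k_B \ln\left(\frac{V}{V^\ominus}\right)$$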

obtained by taking the $S(T, V, N)$ dependence derived in the last Chapter:

(7) $$S(T, V, N) = N c_V \ln\left(\frac{T}{T^\ominus}\right) + N k_B \ln\left(\frac{V}{V^\ominus}\right)$$

and substituting $T = E/(N c_V)$, which follows from the ideal-gas energy $E = N c_V T$. Note we must use the entropy as a function of energy, volume, and particle number, $S(E, V, N)$, for both $\tilde{A}$ and $\tilde{G}$! In the graph, we set ![]() and ![]() and note that the precise choice of $T^\ominus$, $V^\ominus$, and $N$ affects only the vertical, but not the lateral, position of the graph. Thus, the positions of the minima are not affected. Lastly, it is convenient to graph extensive quantities per particle.
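The location of the minimum can be checked numerically. Below is a minimal sketch that works per particle with $\tilde{g}(e, v) = e - T\,[c_V \ln(e/c_V T^\ominus) + k_B \ln(v/v^\ominus)] + p\,v$, i.e., Eq. 5 combined with Eq. 6 and divided by $N$, up to an $N$-dependent constant that only shifts the surface vertically. The parameter values ($k_B = 1$, $c_V = 3/2$, $T^\ominus = v^\ominus = 1$, $T = 2$, $p = 1/2$) are hypothetical choices for illustration. Because $\tilde{g}$ splits into an $e$-part and a $v$-part, each variable can be minimized by a one-dimensional golden-section search. The minimum should land at $e = c_V T$ and $v = k_B T/p$, that is, at the ideal-gas energy and equation of state.

```python
import math

# Hypothetical parameter choices for illustration (units with k_B = 1).
K_B = 1.0     # Boltzmann constant
C_V = 1.5     # heat capacity per particle, monatomic value
T_REF = 1.0   # reference temperature (shifts the surface only vertically)
V_REF = 1.0   # reference specific volume (shifts the surface only vertically)


def g_tilde(e, v, T, p):
    """Per-particle G~ = e - T s(e, v) + p v, with s(e, v) from Eq. 6 divided by N."""
    s = C_V * math.log(e / (C_V * T_REF)) + K_B * math.log(v / V_REF)
    return e - T * s + p * v


def golden_min(f, a, b, tol=1e-10):
    """Return the minimizer of a unimodal function f on [a, b] (golden-section search)."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0  # 1/phi, about 0.618
    while b - a > tol:
        c = b - invphi * (b - a)
        d = a + invphi * (b - a)
        if f(c) < f(d):
            b = d  # minimum lies in [a, d]
        else:
            a = c  # minimum lies in [c, b]
    return 0.5 * (a + b)


def equilibrium_state(T, p):
    """Minimize g_tilde over e and v. The function is a sum of an e-part and a
    v-part, so each variable is minimized with the other held at any fixed value."""
    e_star = golden_min(lambda e: g_tilde(e, 1.0, T, p), 1e-6, 100.0)
    v_star = golden_min(lambda v: g_tilde(1.0, v, T, p), 1e-6, 100.0)
    return e_star, v_star


e_eq, v_eq = equilibrium_state(T=2.0, p=0.5)
print(e_eq, C_V * 2.0)        # minimum in e vs. c_V T
print(v_eq, K_B * 2.0 / 0.5)  # minimum in v vs. k_B T / p
```

Changing `T_REF` or `V_REF` shifts every value of `g_tilde` by the same constant, so the located minimum does not move, in line with the remark about the graph.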

In the situation where $T$ and $p$ stand for the externally imposed temperature and pressure and we are considering fluctuations of energy and volume, it is easy to see that such fluctuations will be subject to a restoring force if the curvature of the $\tilde{G}(E, V)$ surface is positive throughout. We have already discussed the stability with respect to energy fluctuations in the preceding Chapter and postpone further discussion of the necessary criteria until the next Chapter.

Similarly to how we defined the Helmholtz free energy as the minimum of the $\tilde{A} = E - TS$ potential, we now define the Gibbs free energy as the minimum of the $\tilde{G}(E, V)$ function:

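(8) $$G(T, p, N) = \min_{E, V} \tilde{G}(E, V) = \min_{E, V}\left[E - T\,S(E, V, N) + p\,V\right]$$

or, simply,

(9) $$G = E - TS + pV$$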

where it is understood that every quantity is at its equilibrium value and, as such, is a function of exactly three independent quantities. A natural (but not unique!) choice of the variables is $T$, $p$, and $N$.

We are now ready to formulate yet another version of the 2nd Law of Thermodynamics, namely, that at constant pressure and temperature, a large system will spontaneously and irreversibly relax so as to minimize its Gibbs free energy. Once equilibrated, the system will remain in equilibrium indefinitely. This form of the 2nd Law is especially useful because constant-$(T, p)$ conditions are very common in experiment. To avoid confusion, we point out that although the equilibration of the system is all but inevitable, the 2nd Law, by itself, does not provide guidance as to the kinetics of the equilibration. These kinetics may, in fact, be rather slow. For instance, the stable form of solid carbon at normal conditions is graphite, not diamond. Yet diamond is incredibly stable, as we all know. Likewise, liquid glycerol can be stored on the shelf for decades at normal conditions, yet its stable form at normal conditions is a crystalline solid!

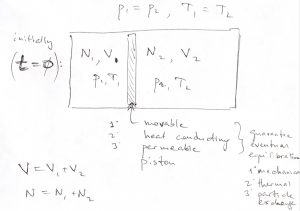

As a simple illustration of the utility of the constant-$(T, p)$ form of the 2nd Law, let us convince ourselves that gaseous mixtures essentially never de-mix. Imagine two distinct, nearly ideal gases occupying two sides of a container separated by a movable, thermally conducting partition. The partition is also weakly permeable to both gases. Note these properties guarantee eventual equilibration: mechanical, thermal, and with respect to particle exchange. Suppose the gases occupy volumes $V_1$ and $V_2$ and are maintained at the same temperature and pressure.

Thus, according to the ideal gas law, the particle numbers must be proportional to the respective volumes:

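(10) $$\frac{N_1}{V_1} = \frac{N_2}{V_2} = \frac{p}{k_B T}$$

Thus, the mole fractions of the two gases are, respectively,

(11) $$x_1 \equiv \frac{N_1}{N_1 + N_2} = \frac{V_1}{V_1 + V_2}$$

and

(12) $$x_2 \equiv \frac{N_2}{N_1 + N_2} = \frac{V_2}{V_1 + V_2}$$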

Note $x_1 + x_2 = 1$.

Clearly, both thermal and mechanical equilibrium are in place, but the system is not in full equilibrium: Since the concentrations of each gas differ on the opposite sides of the partition, there will be uncompensated fluxes of both kinds of particles until the mixture has the same composition in both parts of the container. No quantity in Eq. 9 will change as a result of the particle exchange except for one: the entropy. As a result of the mixing, the volume available to gas 1 will increase from $V_1$ to $V_1 + V_2$, and the volume available to gas 2 will increase from $V_2$ to $V_1 + V_2$. The resulting entropy change is, then,

(13) ![Rendered by QuickLaTeX.com \begin{eqnarray*} \Delta S_\text{mix}/k_B &= &\left[ N_1 \ln\left(\frac{V_1+V_2}{V^\ominus}\right) \,+\, N_2 \ln\left(\frac{V_1+V_2}{V^\ominus}\right) \right] \\ &-& \left[ N_1 \ln\left(\frac{V_1}{V^\ominus}\right) \,+\, N_2 \ln\left(\frac{V_2}{V^\ominus}\right) \right] \\ &=& \left[ N_1 \ln\left(\frac{V_1+V_2}{V_1}\right) \,+\, N_2 \ln\left(\frac{V_1+V_2}{V_2}\right) \right] \\ &=& (N_1+N_2)\left[ \frac{N_1}{N_1+N_2} \ln\left(\frac{V_1+V_2}{V_1}\right) \,+\, \frac{N_2}{N_1+N_2} \ln\left(\frac{V_1+V_2}{V_2}\right) \right] \end{eqnarray*}](https://uhlibraries.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-176c543c5a21fa6f5b5480c8b5449384_l3.png)

In terms of the mole fraction $x \equiv x_1$ and the total particle number $N \equiv N_1 + N_2$, this expression looks particularly appealing:

(14) $$\Delta S_\text{mix} = -N k_B\left[x \ln x + (1 - x)\ln(1 - x)\right]$$

One can likewise derive that for an arbitrary mixture of ideal gases,

(15) $$\Delta S_\text{mix} = -N k_B \sum_i x_i \ln x_i$$

a celebrated formula, due to Gibbs.
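Eqs. 13 through 15 are straightforward to evaluate directly. Below is a minimal sketch that assumes ideal gases at a common temperature and pressure and, for illustration only, units in which $p/k_B T = 1$, so that each particle number $N_i$ equals the corresponding volume $V_i$ by Eq. 10. The first function sums $N_i \ln[(V_1 + V_2 + \ldots)/V_i]$, each gas expanding into the whole container. The second implements the Gibbs formula $\Delta S_\text{mix}/(N k_B) = -\sum_i x_i \ln x_i$. The two should agree, and the second should peak at $\ln 2$ for an equal binary mixture.

```python
import math


def mixing_entropy_from_volumes(volumes):
    """Delta S_mix / k_B from Eq. 13, generalized to any number of gases.

    Assumes units in which p / (k_B T) = 1, so that N_i = V_i (Eq. 10);
    each gas expands from its own volume V_i into the total volume.
    """
    v_total = sum(volumes)
    return sum(v * math.log(v_total / v) for v in volumes if v > 0)


def mixing_entropy_per_particle(fractions):
    """Gibbs formula, Delta S_mix / (N k_B) = -sum_i x_i ln x_i (Eq. 15)."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("mole fractions must sum to 1")
    # Absent components (x_i = 0) contribute nothing, since x ln x -> 0 as x -> 0.
    return -sum(x * math.log(x) for x in fractions if x > 0)


volumes = [1.0, 3.0]                  # V_1, V_2 (hypothetical values)
n_total = sum(volumes)                # N = N_1 + N_2 in the chosen units
fractions = [v / n_total for v in volumes]
print(mixing_entropy_from_volumes(volumes) / n_total)  # per particle, from volumes
print(mixing_entropy_per_particle(fractions))          # per particle, from mole fractions
```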

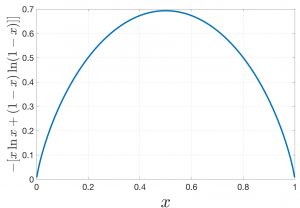

One can easily convince oneself that the mixing entropy is positive for any genuine mixture, which we will do here graphically for the binary mixture from Eq. 14, while remembering that $0 \le x \le 1$. The mixing entropy vanishes only for pure substances, $x = 0$ or $x = 1$, of course, and is maxed out at $k_B \ln 2$ per particle for an equal mixture, $x = 1/2$:

The corresponding Gibbs free energy change is, then, always negative:

(16) $$\Delta G_\text{mix} = -T\,\Delta S_\text{mix} = N k_B T\left[x \ln x + (1 - x)\ln(1 - x)\right]$$

To appreciate just how unlikely de-mixing would be, we compare the probabilities of the mixed and de-mixed states. As in the preceding Chapter, the ratio of the numbers of accessible states is given by the exponential of the free energy difference times $-1/k_B T$, the pertinent free energy being that due to Gibbs, because we are keeping the temperature and pressure fixed:

(17) $$\frac{P_\text{mixed}}{P_\text{de-mixed}} = e^{-(G_\text{mixed} - G_\text{de-mixed})/k_B T} = e^{-\Delta G_\text{mix}/k_B T} = e^{\Delta S_\text{mix}/k_B}$$

For one mole worth of a 50/50 mixture, this would yield $e^{N_A \ln 2} = 2^{N_A} \approx 10^{1.8 \times 10^{23}}$, a grotesquely large number. To put this in perspective, I quote here the well-known French mathematician Borel (by way of G.N. Lewis’s book “The Anatomy of Science”): “Imagine a million monkeys allowed to play upon the keys of a million typewriters. What is the chance that this wanton activity should reproduce exactly all of the volumes which are contained in the library of the British Museum? It certainly is not a large chance, but it may be roughly calculated, and proves in fact to be considerably larger than the chance that a mixture of oxygen and nitrogen will separate into the two pure constituents.” (Eq. (17) is an application of the more general formula $P_1/P_2 = e^{-(G_1 - G_2)/k_B T}$ for the relative probability of two thermodynamic (not microscopic) states 1 and 2 at the same temperature and pressure. Here we write down the latter formula by analogy with Eq. (53) of Chapter 6. To derive it systematically, one has to generalize the derivation of the Gibbs-Boltzmann distribution so as to keep track of both the energy and the volume of a microstate. Like the energies, the volumes of independent systems simply add.)
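The size of the ratio in Eq. 17 is easier to grasp through its logarithm, since $e^{\Delta S_\text{mix}/k_B}$ itself overflows any floating-point type. Below is a minimal sketch assuming a mixture of ideal gases, for which $\Delta S_\text{mix}/k_B = -N\sum_i x_i \ln x_i$; it returns the base-10 exponent of the ratio.

```python
import math

N_A = 6.02214076e23  # Avogadro constant, 1/mol (exact in the 2019 SI)


def log10_probability_ratio(n_particles, fractions):
    """log10 of P_mixed / P_demixed = exp(Delta S_mix / k_B), cf. Eq. 17.

    Uses Delta S_mix / k_B = -N sum_i x_i ln x_i for ideal gases. The ratio
    itself would overflow a float, so only its base-10 logarithm is returned.
    """
    entropy_per_particle = -sum(x * math.log(x) for x in fractions if x > 0)
    return n_particles * entropy_per_particle / math.log(10.0)


exponent = log10_probability_ratio(N_A, [0.5, 0.5])
print(f"P_mixed / P_demixed = 10^({exponent:.3e})")
```

For one mole of an equimolar binary mixture, the exponent is about $1.8 \times 10^{23}$, matching the estimate quoted above.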

Now, the aforementioned choice of the quantities ![]() ,

, ![]() , and

, and ![]() as the arguments of

as the arguments of ![]() is natural not only in view of the definition of the Gibbs energy as the minimum of the

is natural not only in view of the definition of the Gibbs energy as the minimum of the ![]() function at fixed

function at fixed ![]() ,

, ![]() , and

, and ![]() , but also because of the fact that the partial derivative of

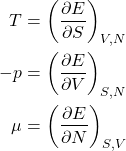

, but also because of the fact that the partial derivative of ![]() w.r.t. these variables are particularly simple. To discuss this point in a more general way, let us revisit energy conservation while including the possibility that the particle number is allowed to change. For this, let us define a new quantity, called the chemical potential. The chemical potential is the energy cost of adding a particle to the system while no heat exchange takes place and no mechanical work is done:

w.r.t. these variables are particularly simple. To discuss this point in a more general way, let us revisit energy conservation while including the possibility that the particle number is allowed to change. For this, let us define a new quantity, called the chemical potential. The chemical potential is the energy cost of adding a particle to the system while no heat exchange takes place and no mechanical work is done: ![]() . We note that

. We note that ![]() . Under the assumption that the energy is a smooth function of the particle number

. Under the assumption that the energy is a smooth function of the particle number ![]() , this ratio can be re-written as the following derivative:

, this ratio can be re-written as the following derivative:

(18) $$\mu = \left(\frac{\partial E}{\partial N}\right)_{S, V}$$

(There are certain issues with treating the intrinsically discrete variable $N$ as continuous, but they can be addressed systematically; see the Bonus and Advanced Discussions at the end of this Chapter.)

We can now write the energy conservation for systems with a variable particle number:

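(19) $$dE = T\,dS - p\,dV + \mu\,dN$$

or, equivalently,

(20) $$\begin{aligned} T &= \left(\frac{\partial E}{\partial S}\right)_{V,N} \\ p &= -\left(\frac{\partial E}{\partial V}\right)_{S,N} \\ \mu &= \left(\frac{\partial E}{\partial N}\right)_{S,V} \end{aligned}$$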

Note that at this point, we are still considering pure systems, i.e., those containing just one chemical species. Mixtures will be considered in due time.

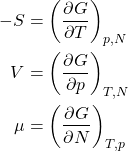

Thus for the increment of the Gibbs free energy we obtain

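(21) $$dG = dE - T\,dS - S\,dT + p\,dV + V\,dp$$

which leads, in view of Eq. 19, to

(22) $$dG = -S\,dT + V\,dp + \mu\,dN$$

or, equivalently,

(23) $$\begin{aligned} S &= -\left(\frac{\partial G}{\partial T}\right)_{p,N} \\ V &= \left(\frac{\partial G}{\partial p}\right)_{T,N} \\ \mu &= \left(\frac{\partial G}{\partial N}\right)_{T,p} \end{aligned}$$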

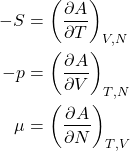

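Likewise, the increment of the Helmholtz energy, $A = E - TS$, for a system with a variable particle number becomes

(24) $$dA = -S\,dT - p\,dV + \mu\,dN$$

or, equivalently,

(25) $$\begin{aligned} S &= -\left(\frac{\partial A}{\partial T}\right)_{V,N} \\ p &= -\left(\frac{\partial A}{\partial V}\right)_{T,N} \\ \mu &= \left(\frac{\partial A}{\partial N}\right)_{T,V} \end{aligned}$$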

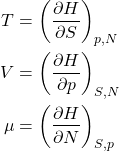

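And, finally, we introduce a new function, called the enthalpy, or the heat function:

(26) $$H = E + pV$$

Since $dH = dE + p\,dV + V\,dp$, one obtains

(27) $$dH = T\,dS + V\,dp + \mu\,dN$$

or, equivalently,

(28) $$\begin{aligned} T &= \left(\frac{\partial H}{\partial S}\right)_{p,N} \\ V &= \left(\frac{\partial H}{\partial p}\right)_{S,N} \\ \mu &= \left(\frac{\partial H}{\partial N}\right)_{S,p} \end{aligned}$$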

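The increments of all four energy functions given above display a clear pattern: They all have simple-looking derivatives for certain choices of independent parameters, as summarized in the table below:

| Energy function and its natural variables | Increment |
|---|---|
| $E(S, V, N)$ | $dE = T\,dS - p\,dV + \mu\,dN$ |
| $A(T, V, N)$ | $dA = -S\,dT - p\,dV + \mu\,dN$ |
| $G(T, p, N)$ | $dG = -S\,dT + V\,dp + \mu\,dN$ |
| $H(S, p, N)$ | $dH = T\,dS + V\,dp + \mu\,dN$ |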

Other choices of variables are possible so long as

- The number of the variables is exactly three.

- At least one of those three variables corresponds to an extensive quantity. This is needed because each one of the four energies is an extensive quantity itself and so at least one of the variables must contain information as to the size of the system.

The converse of item 2 is worth elaborating on: If expressed per particle, each of the four energies no longer depends on $N$ and, thus, is a function of exactly two variables. Neither of these remaining variables can contain information about the system size; thus, both must be intensive. Now let us take every item in the 1st column of the table above and re-write it per particle. In those cases where one or both of the arguments are extensive variables, we must replace them with their intensive counterparts per particle:

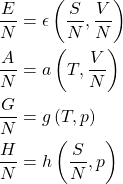

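(29) $$\begin{aligned} E(S, V, N) &= N\,\varepsilon(s, v) \\ A(T, V, N) &= N\,a(T, v) \\ G(T, p, N) &= N\,g(T, p) \\ H(S, p, N) &= N\,h(s, p) \end{aligned} \qquad \left(s \equiv \frac{S}{N},\; v \equiv \frac{V}{N}\right)$$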

One immediately notices that, of the four energies, the Gibbs energy has the simplest $N$ dependence:

(30) $$G(T, p, N) = N\,g(T, p)$$

and so

(31) $$\left(\frac{\partial G}{\partial N}\right)_{T,p} = g(T, p)$$

Comparing this result with the bottom equation from Eq. 23, one immediately obtains $\mu = g(T, p)$. In view of Eq. 30, this leads to the remarkable result that the chemical potential is, in fact, the Gibbs free energy per particle!

(32) $$\mu = \frac{G}{N}$$

It is also clear from the above discussion that only two intensive variables are independent, while any other intensive property can be expressed as their function. A useful instance of such a functional relation can be obtained by noticing that $dG = d(N\mu) = \mu\,dN + N\,d\mu$ and subtracting $\mu\,dN$ from Eq. 22. This yields what is known as the Gibbs-Duhem equation:

(33) $$N\,d\mu = -S\,dT + V\,dp$$

It becomes particularly lucid when written out in terms of quantities per particle, which is accomplished by dividing it by the particle number:

(34) $$d\mu = -s\,dT + v\,dp$$

where $s \equiv S/N$ is the specific entropy and $v \equiv V/N$ the specific volume. Every quantity in the above equation is intensive. Thus we obtain $\mu = \mu(T, p)$, with $s = -(\partial \mu/\partial T)_p$ and $v = (\partial \mu/\partial p)_T$, etc., as just advertised.
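The Gibbs-Duhem relation in the form $d\mu = -s\,dT + v\,dp$ can be checked numerically once $\mu(T, p)$ is known. Below is a minimal sketch that assumes the standard monatomic ideal-gas result $\mu = -k_B T \ln[k_B T/(p\lambda^3)]$ (consistent with the specific-volume form of the free energy discussed in the Advanced Discussion at the end of this Chapter) and hypothetical units with $k_B = 1$ and $h^2/(2\pi m) = 1$, so that $\lambda^3 = T^{-3/2}$. Central finite differences of $\mu$ should reproduce $v = k_B T/p$ and $s = k_B\{\ln[k_B T/(p\lambda^3)] + 5/2\}$.

```python
import math


def mu(T, p):
    """Chemical potential of a monatomic ideal gas, mu = -T ln[T / (p * lambda^3)].

    Hypothetical units: k_B = 1 and h^2 / (2 pi m) = 1, so lambda^3 = T**-1.5.
    """
    lam3 = T ** -1.5
    return -T * math.log(T / (p * lam3))


def d_dx(f, x, h=1e-5):
    """Central finite-difference derivative of a one-argument function."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


T, p = 2.0, 0.5
v_numeric = d_dx(lambda pp: mu(T, pp), p)     # (d mu / d p) at fixed T
s_numeric = -d_dx(lambda TT: mu(TT, p), T)    # -(d mu / d T) at fixed p

v_exact = T / p                                # ideal-gas specific volume
s_exact = math.log(T / (p * T ** -1.5)) + 2.5  # entropy per particle, monatomic gas
print(v_numeric, v_exact)
print(s_numeric, s_exact)
```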

Why do we call enthalpy the “heat function”? Because it directly reflects the amount of heat exchanged during an isobaric process. (“Isobaric” means “at constant pressure”.) Indeed, per Eq. 27, at constant $p$ and $N$,

(35) $$dH = T\,dS = \delta Q$$

If the temperature is kept constant as well, one can easily integrate this to obtain

(36) $$\Delta H = T\,\Delta S = Q$$

(Clearly, the system must change its volume during such a process!)

Another consequence of Eq. 35 is a useful expression for the heat capacity at constant pressure:

(37) $$C_p = \left(\frac{\delta Q}{dT}\right)_{p,N} = \left(\frac{\partial H}{\partial T}\right)_{p,N}$$

This indicates that the enthalpy is essentially the constant-pressure analog of the energy.
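For the ideal gas, the enthalpy takes a simple form, which makes Eq. 37 easy to check. Below is a minimal sketch assuming $E = N c_V T$ and $pV = N k_B T$, with hypothetical values $k_B = 1$ and $c_V = 3/2$. In that case, $H = N(c_V + k_B)T$ independently of $p$, and a finite-difference derivative of $H$ with respect to $T$ at fixed $p$ gives $C_p = N(c_V + k_B)$, exceeding $C_V = N c_V$ by $N k_B$.

```python
# Hypothetical units and values for illustration: k_B = 1, monatomic c_V.
K_B = 1.0
C_V = 1.5


def enthalpy(T, p, N=1.0):
    """H = E + pV for an ideal gas, with E = N c_V T and V = N k_B T / p."""
    energy = N * C_V * T
    volume = N * K_B * T / p
    return energy + p * volume


def heat_capacity_at_constant_p(T, p, N=1.0, dT=1e-4):
    """C_p = (dH/dT) at fixed p and N (Eq. 37), by a central finite difference."""
    return (enthalpy(T + dT, p, N) - enthalpy(T - dT, p, N)) / (2.0 * dT)


c_p = heat_capacity_at_constant_p(T=300.0, p=2.0)
print(c_p, C_V + K_B)  # C_p per particle vs. c_V + k_B
```

Because the ideal-gas $H$ does not depend on $p$, the same result holds at any pressure.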

Finally, one may also write down simple relations between the thermodynamic functions, such as

(38) $$A = G - pV$$

or

(39) $$H = G + TS$$

As an illustration of the utility of these and earlier expressions, let us determine the expression for the chemical potential of a single-component ideal gas. First re-write Eq. 19 as

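(40) $$dS = \frac{1}{T}\,dE + \frac{p}{T}\,dV - \frac{\mu}{T}\,dN$$

and so

(41) $$\frac{\mu}{T} = -\left(\frac{\partial S}{\partial N}\right)_{E,V}$$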

To use this formula, we must express the entropy as a function of the three variables $E$, $V$, and $N$ exclusively. (This means that any other quantity that could be involved in the available expression must be expressed through those three variables.) Just such an expression is available in Eq. 6. Differentiating it w.r.t. $N$, while keeping $E$ and $V$ fixed, yields:

(42) ![Rendered by QuickLaTeX.com \begin{eqnarray*} -\frac{\mu}{T} &=& c_V \left[ \ln \left( \frac{E}{N c_V T^\ominus} \right) - 1 \right] +k_B \ln \left( \frac{V}{V^\ominus} \right) \\ &=& k_B \ln \left[ \left( \frac{E}{e N c_V T^\ominus} \right)^{c_V/k_B} \left( \frac{V}{V^\ominus} \right) \right] \end{eqnarray*}](https://uhlibraries.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-744827ea730c7b0e87a948b38036bb86_l3.png)

Here we used that $1 = \ln e$, where $e = 2.718\ldots$ is the base of the natural logarithm, and that $c_V \ln y = k_B \ln y^{c_V/k_B}$. Substituting $E = N c_V T$, one obtains a compact expression:

(43) ![Rendered by QuickLaTeX.com \begin{equation*} \mu \:=\: - k_B T \ln \left[ \left( \frac{T}{e T^\ominus} \right)^{c_V/k_B} \left( \frac{V}{V^\ominus} \right) \right] \end{equation*}](https://uhlibraries.pressbooks.pub/app/uploads/quicklatex/quicklatex.com-ee557b403314065a6ac9e53c0caddb07_l3.png)

which, again, emphasizes the role of $k_B T$ as the basic thermal energy scale for a particle, but modified to account for specific conditions. The above expression can be re-written for other combinations of parameters using the equation of state. It can also be used to write down expressions for the thermodynamic functions. For instance, for a process conserving the particle number, one gets

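(44) $$G = N\mu = -N k_B T \ln\left[\left(\frac{T}{e T^\ominus}\right)^{c_V/k_B}\left(\frac{V}{V^\ominus}\right)\right]$$

The corresponding Helmholtz free energy is, by virtue of Eq. 38:

(45) $$A = G - pV = -N k_B T\left\{\ln\left[\left(\frac{T}{e T^\ominus}\right)^{c_V/k_B}\left(\frac{V}{V^\ominus}\right)\right] + 1\right\}$$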
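The differentiation leading from Eq. 6 to Eq. 43 can be double-checked numerically. Below is a minimal sketch assuming hypothetical values $k_B = 1$, $c_V = 3/2$, and $T^\ominus = V^\ominus = 1$. It evaluates the entropy of Eq. 6, takes a central finite difference in $N$ at fixed $E$ and $V$, forms $\mu = -T(\partial S/\partial N)_{E,V}$ with $T = E/(N c_V)$, and compares the result with the closed form $\mu = -k_B T \ln[(T/eT^\ominus)^{c_V/k_B}(V/V^\ominus)]$ of Eq. 43.

```python
import math

K_B, C_V = 1.0, 1.5      # units with k_B = 1; monatomic c_V (assumptions)
T_REF, V_REF = 1.0, 1.0  # reference-state values (arbitrary)


def entropy(E, V, N):
    """S(E, V, N) of the ideal gas, Eq. 6."""
    return (N * C_V * math.log(E / (N * C_V * T_REF))
            + N * K_B * math.log(V / V_REF))


def mu_numeric(E, V, N, h=1e-5):
    """mu = -T (dS/dN) at fixed E and V, with T = E / (N c_V); central difference."""
    T = E / (N * C_V)
    dS_dN = (entropy(E, V, N + h) - entropy(E, V, N - h)) / (2.0 * h)
    return -T * dS_dN


def mu_closed_form(T, V):
    """Eq. 43: mu = -k_B T ln[(T / (e T_ref))^(c_V/k_B) * (V / V_ref)]."""
    return -K_B * T * math.log((T / (math.e * T_REF)) ** (C_V / K_B) * (V / V_REF))


N, T, V = 10.0, 4.0, 50.0
E = N * C_V * T  # ideal-gas energy at temperature T
print(mu_numeric(E, V, N), mu_closed_form(T, V))
```

Note that $N$ is treated here as a continuous variable, exactly as in the derivation above; the caveats about doing so are taken up in the discussions that follow.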

Bonus Discussion. Formulas up to Eq.(45) were derived having in mind processes that conserve particles. Some care is needed to use them for processes that do involve changes in ![]() . Processes that do not conserve particle numbers are central in Chemistry: Reactants and products inter-convert while the reaction itself exchanges particles with the environment via evaporation, condensation/solvation of airborne gasses, precipitation of poorly solvable species, etc. In contrast with changes in energy and volume, which can be made arbitrarily small, changes in the particle number

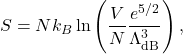

. Processes that do not conserve particle numbers are central in Chemistry: Reactants and products inter-convert while the reaction itself exchanges particles with the environment via evaporation, condensation/solvation of airborne gasses, precipitation of poorly solvable species, etc. In contrast with changes in energy and volume, which can be made arbitrarily small, changes in the particle number ![]() are intrinsically discrete. Thus, while two states with different volumes, for instance, can be made arbitrarily similar, two states with different particle numbers may be similar, but are strictly distinct. Cases when a quantity is intrinsically discrete—or “quantized”—must dealt using Quantum Mechanics. Here, we can weasel our way of this apparent complication by using a reference state in Eqs. (7) that does not explicitly specify the particle number:

are intrinsically discrete. Thus, while two states with different volumes, for instance, can be made arbitrarily similar, two states with different particle numbers may be similar, but are strictly distinct. Cases when a quantity is intrinsically discrete—or “quantized”—must dealt using Quantum Mechanics. Here, we can weasel our way of this apparent complication by using a reference state in Eqs. (7) that does not explicitly specify the particle number:

(46) $$S = N c_V \ln\left(\frac{T}{T^\ominus}\right) + N k_B \ln\left(\frac{V}{N v^\ominus}\right)$$

where the quantity $v^\ominus$ is the specific volume of the reference state. It can, of course, be expressed through the reference temperature and pressure, $v^\ominus = k_B T^\ominus/p^\ominus$; I remind the reader that we are working with the ideal gas. Let us evaluate the entropy change due to a change in the particle number at constant volume and temperature:

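(47) $$\left(\frac{\partial S}{\partial N}\right)_{T,V} = c_V \ln\left(\frac{T}{T^\ominus}\right) + k_B \ln\left(\frac{V}{N v^\ominus}\right) - k_B$$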

To find out how this rather complicated expression comes about, the reader is invited to read the rest of the Chapter.

Advanced Discussion.

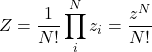

There would seem to be nothing special about processes that do not conserve the particle number. Perhaps the simplest example of such a process is a leaky container. Yet a lack of particle conservation, if any, has a fascinating statistical aspect: it allows one, in principle, to label particles that are otherwise identical. Indeed, imagine a leaky container that typically lets out one particle at a time. An escaped particle can thus be singled out and labeled by its location, because the latter location is outside the container. In contrast, we had built our description on the assumption that particles inside the container are strictly identical and exchange places faster than any relevant experimental time scale, thus preventing one from labeling the particles using their location. To “patch” this apparent limitation of assuming intrinsic indistinguishability of chemically identical particles when the particle number is not conserved, we first note that making a small hole in the container automatically breaks the translational symmetry of space: Those particles that are the closest to the hole are different from the rest because they are the likeliest to escape first; the corresponding region that is likeliest to contribute a particle has a volume comparable to that occupied by one particle, on the average. Consequently we must concede that the volume relevant for statistics of identical particles is not the total volume but, instead, the specific volume, i.e., the volume per particle. In other words, a particle can be labeled—and thus distinguished from the other particles—so long as it is contained within a region comparable to the specific volume. Conversely, if a volume contains more than one particle, the particles lose their identities. As an informal example, think of two identical twins in one room. So long as you know the two are confined to two separate parts of the room, you can label the twins by their locations. 
If, instead, the twins move about, such labeling is no longer possible. The above notions, which underlie the famous Maxwell demon paradox among other things, can be addressed systematically in Quantum Mechanics; see the last Chapter of these Notes. Here, we will limit ourselves to the simplest argument, which already suffices for nearly ideal gases. The Helmholtz free energy is computed according to $A = -k_B T \ln Z$, where $Z$ is the partition function. Let us first consider an ideal gas made up of $N$ distinct particles. The total number of thermally available states for our compound system is simply the product of the numbers of states available to the subsystems: $Z = z^N$. If the particles are, instead, indistinguishable, the latter expression overcounts the number of available states by the number of possible permutations of the particles, since all configurations obtained using such permutations are physically equivalent. (This assumes no two particles are in the same quantum state, something we do not have to worry about at the low densities at which the gas behaves as nearly ideal. Informally speaking, one cannot permute two particles that occupy the same spot.) Since the number of permutations of $N$ objects is equal to $N!$, a good approximation for the total number of thermally available states is given by

(48) $$Z \approx \frac{z^N}{N!}$$

where $z$ is the partition function (i.e., the number of thermally available states) for an individual particle, if standalone. The partition function for an individual monatomic molecule, according to quantum mechanics, is given by

(49) $$z = \frac{V}{\lambda^3}$$

where the quantity

(50) $$\lambda = \frac{h}{\sqrt{2\pi m k_B T}}$$

is the so-called thermal de Broglie wavelength. This quantity gives the localization length of a particle at thermal energy. In a sense, the partition function (49) provides the number of distinct spots a particle in equilibrium with its environment can occupy. Using the Stirling approximation, $\ln N! \approx N \ln N - N$, which is valid for $N \gg 1$, we obtain for a monatomic gas:

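(51) $$A = -k_B T \ln\frac{z^N}{N!} \approx -k_B T\left[N \ln\left(\frac{V}{\lambda^3}\right) - N \ln N + N\right]$$

and so, finally, we obtain that the volume entering the expression for the Helmholtz free energy is the specific volume, not the total volume, as anticipated earlier:

(52) $$A = -N k_B T \ln\left(\frac{e\,V}{N\lambda^3}\right) = -N k_B T \ln\left(\frac{e\,v}{\lambda^3}\right)$$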

While this circumstance does not affect the value of the pressure, $p = -(\partial A/\partial V)_{T,N} = N k_B T/V$, it does affect the value of the entropy

(53) $$S = \frac{E - A}{T}$$

where we used

(54) $$E = \frac{3}{2} N k_B T$$

(The formula $S = -(\partial A/\partial T)_{V,N}$ can be used as well but is somewhat less lucid.) This yields for the entropy:

(55) $$S = N k_B\left[\ln\left(\frac{V}{N\lambda^3}\right) + \frac{5}{2}\right]$$

for a monatomic ideal gas. Juxtaposition of expressions (51), (54), and (55) confirms that the statistical effect of the particles being indistinguishable, which results in the appearance of the specific volume in the free energy, is exclusively of entropic nature. These expressions can be readily generalized to cases when the particles have internal degrees of freedom, such as rotations and vibrations. This will modify the temperature dependence of the expressions, but not their dependence on the volume, since the internal degrees of freedom of a molecule are largely decoupled from the location of the molecule in space.
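The Stirling step used to go from $A = -k_B T \ln(z^N/N!)$ to the specific-volume form can be checked directly. Below is a minimal sketch in hypothetical units with $k_B T = 1$ and a dilute gas whose single-particle partition function is $z = V/\lambda^3 = 1000\,N$ (i.e., the specific volume is held fixed as $N$ grows). It compares the free energy with $\ln N!$ computed exactly as `math.lgamma(N + 1)` against the Stirling form $A \approx -N k_B T \ln(e\,z/N)$. The relative discrepancy, which stems from the neglected $\tfrac{1}{2}\ln(2\pi N)$ term in $\ln N!$, shrinks rapidly as $N$ grows.

```python
import math


def free_energy_exact(N, z, kT=1.0):
    """A = -k_B T ln(z^N / N!), with ln N! computed exactly as lgamma(N + 1)."""
    return -kT * (N * math.log(z) - math.lgamma(N + 1))


def free_energy_stirling(N, z, kT=1.0):
    """A = -N k_B T ln(e z / N): Stirling's ln N! ~ N ln N - N applied to z^N / N!."""
    return -N * kT * math.log(math.e * z / N)


for N in (10, 1_000, 100_000):
    z = 1000.0 * N  # z / N = V / (N lambda^3) held fixed (hypothetical dilute gas)
    exact = free_energy_exact(N, z)
    approx = free_energy_stirling(N, z)
    print(N, exact, approx, abs(approx - exact) / abs(exact))
```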

Expressions (51) and (55) can be used to compute, respectively, free energy and entropy changes for processes that do not conserve the particle number.
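As a numerical illustration of the monatomic ideal-gas entropy $S = N k_B[\ln(V/N\lambda^3) + 5/2]$, with $\lambda = h/\sqrt{2\pi m k_B T}$, one can evaluate the molar entropy of argon, a monatomic gas, at 298.15 K and 1 bar, taking $V/N = k_B T/p$ from the ideal gas law. Below is a minimal sketch using SI values of the constants; the result comes out close to 154.8 J/(mol K), in line with the tabulated standard molar entropy of argon.

```python
import math

# SI constants (exact in the 2019 SI, except the atomic mass unit)
K_B = 1.380649e-23          # Boltzmann constant, J/K
H_PLANCK = 6.62607015e-34   # Planck constant, J s
N_A = 6.02214076e23         # Avogadro constant, 1/mol
AMU = 1.66053906660e-27     # atomic mass unit, kg


def thermal_wavelength(mass_kg, T):
    """Thermal de Broglie wavelength, lambda = h / sqrt(2 pi m k_B T)."""
    return H_PLANCK / math.sqrt(2.0 * math.pi * mass_kg * K_B * T)


def molar_entropy_monatomic(mass_amu, T, p):
    """Molar entropy S = R [ln(v / lambda^3) + 5/2], with v = k_B T / p."""
    lam = thermal_wavelength(mass_amu * AMU, T)
    v = K_B * T / p  # specific volume from the ideal gas law
    return N_A * K_B * (math.log(v / lam**3) + 2.5)


s_argon = molar_entropy_monatomic(39.948, 298.15, 1.0e5)
print(f"S(Ar, 298.15 K, 1 bar) = {s_argon:.1f} J/(mol K)")
```

The same function can be used for other monatomic gases by changing the atomic mass; molecules with internal degrees of freedom would require the additional temperature-dependent terms mentioned above.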